Difficulty Level: Intermediate

Imagine building an AI assistant that needs to work with your existing tools—database queries, file access, web APIs. Now imagine having to rebuild these integrations for every new AI model you want to try.

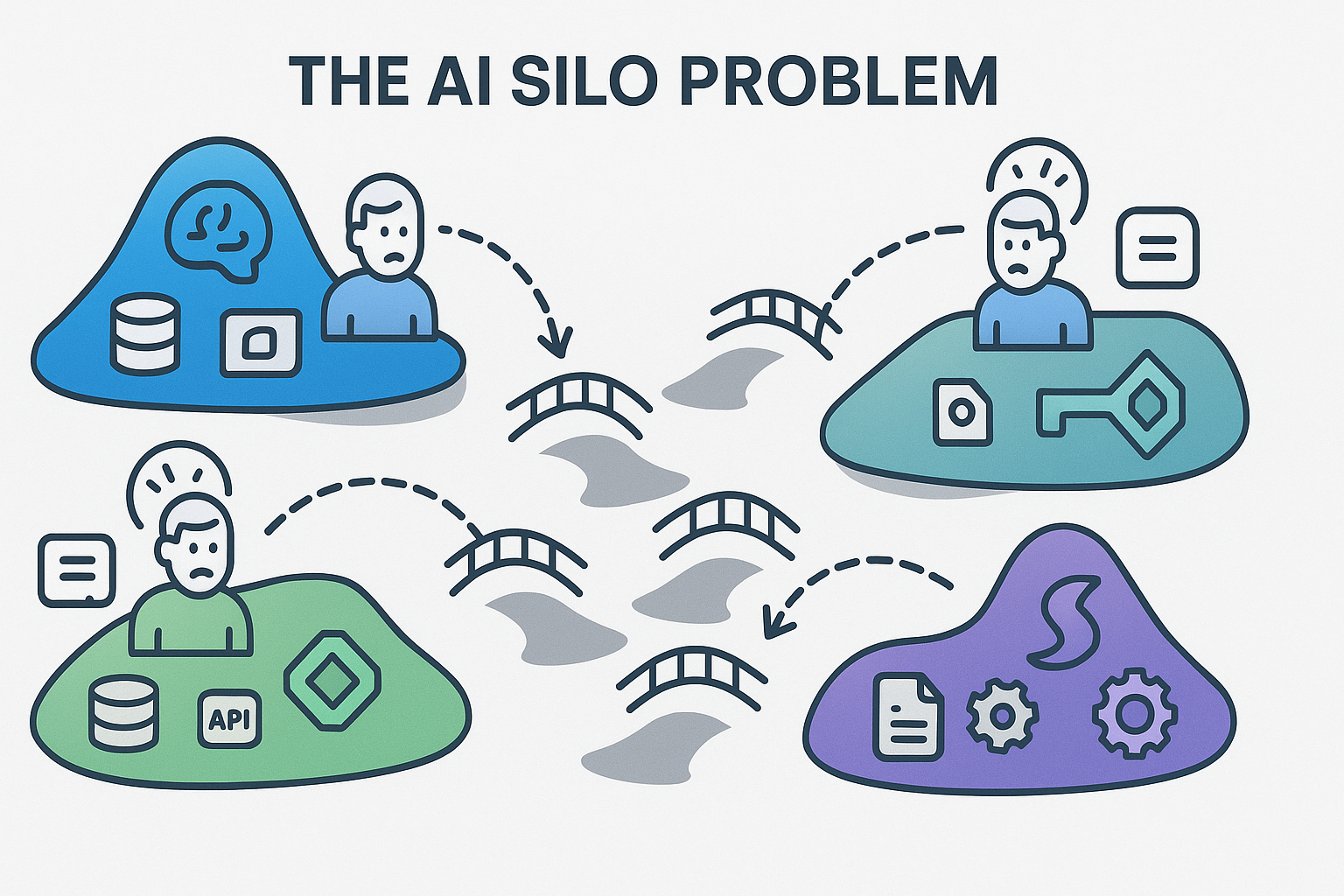

This is the reality developers face today. Each AI platform operates in its own silo, with proprietary APIs and incompatible tool definitions. Want to switch from GPT-4 to Claude? Rewrite everything. Want to test with open-source models? Start from scratch.

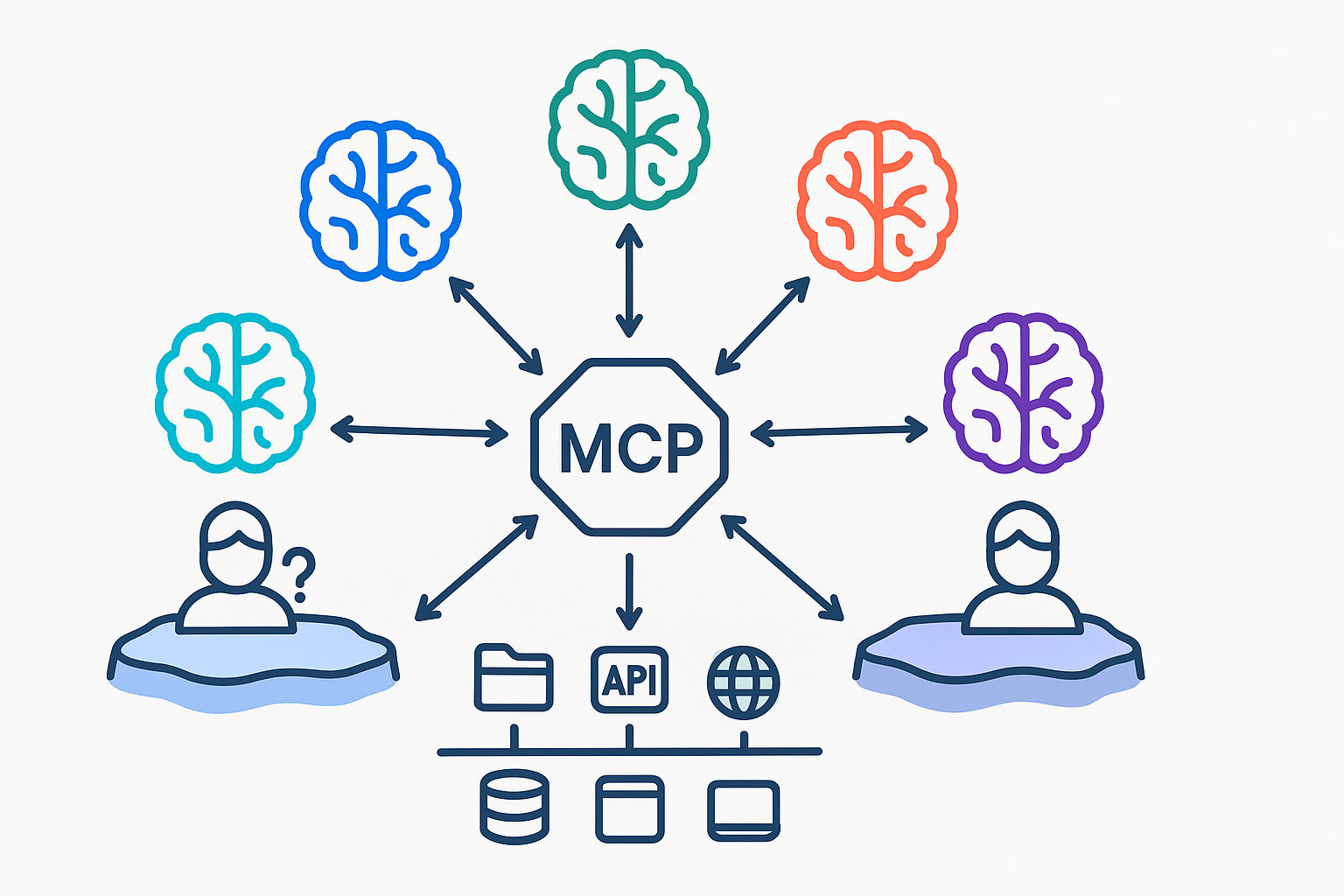

Model Context Protocol (MCP) solves this problem by creating interoperability between AI systems. You write your tools once and use them everywhere.

Quick Answer: MCP enables interoperability by providing a universal protocol that any AI model can use to connect with any tool or service. Instead of platform-specific integrations, MCP creates a common language for AI-tool communication.

What You’ll Learn

- Understanding AI silos and their impact on development

- How MCP enables true interoperability between AI systems

- Real examples of cross-platform AI integration

- The technical architecture that makes it possible

- Building your first interoperable AI tool

The AI Silo Problem Explained (MCP vs Platform-Specific APIs)

Every major AI platform built its own walled garden. It’s like the smartphone wars, but worse - at least phones could still call each other.

The Current Landscape of Isolation

OpenAI’s Function Calling: Powerful and well-documented, but completely tied to their ecosystem. Your carefully crafted functions only work with GPT models. Want to use them elsewhere? Too bad.

Google’s Extensions: Scattered across different products (Vertex AI, Gemini, AI Studio) with inconsistent implementations. Even within Google’s ecosystem, portability is a challenge.

Anthropic’s Tool Use: Clean and effective, but Claude-specific. Those elegant tool definitions you wrote? They’re locked to one platform.

Open Source Models: Each has its own approach. LangChain tries to abstract this mess, but you still end up writing platform-specific code underneath.

The Real Cost of Silos

This fragmentation wastes time and money:

- Duplicate Development: The same database query tool written 4 different ways

- Maintenance Nightmare: Update your API? Now update 4 different integrations

- Vendor Lock-in: Switching AI providers means rewriting everything

- Innovation Barriers: Time spent on integration is time not spent on features

- Testing Complexity: Each platform needs separate test suites

I experienced this firsthand building AI features for VX Therapy, our VR mental health platform. We wanted therapists to query session data using natural language. Three AI platforms later, we had three completely different implementations of the same feature. When we needed to update the database schema, it took a week to update all integrations.

Enter MCP: The Universal Translator

MCP fixes this mess. Instead of each AI platform having its own way to connect to tools, MCP gives us one standard protocol everyone can use.

It’s like electrical outlets. Different countries use different plugs and voltages, but universal adapters work everywhere. Same idea - MCP is the universal adapter for AI tools.

From Platform-Specific to Protocol-Based

It’s a big change:

Before MCP: Each AI platform is an island with its own rules, formats, and quirks. Developers build bridges between specific islands, but those bridges can’t be reused.

With MCP: A common protocol that any AI platform can speak. Build your tool once as an MCP server, and any MCP-compatible AI can use it immediately.

What MCP Gets You

Write Once, Use Everywhere: Your database query tool works with Claude today, GPT-4 tomorrow, and whatever AI model launches next year.

True Model Comparison: Test different AI models on the same task without rewriting integrations. Finally, you can choose based on actual performance, not integration convenience.

Future-Proof Development: When new AI models support MCP, your tools work right away. No waiting for updates or writing new adapters.

Simplified Architecture: One integration layer instead of many. Your codebase stays clean and maintainable.

How Interoperability Works

MCP makes tools work everywhere through three simple design choices:

The Three Pillars of MCP Interoperability

1. Standardized Message Format (JSON-RPC)

MCP uses JSON-RPC, a simple, proven protocol for remote procedure calls. Every message follows the same structure, whether it’s coming from Claude, adapted from GPT-4, or a local model:

{
  "jsonrpc": "2.0",
  "method": "tools/call",
  "params": {
    "name": "read_file",
    "arguments": {
      "path": "/data/report.txt"
    }
  },
  "id": 1
}

This isn’t proprietary to any AI company—it’s an open standard used across the tech industry.

2. Universal Tool Definitions

Tools in MCP are defined using a consistent schema that any AI can understand:

# mcp_server is assumed to be a FastMCP instance, e.g. FastMCP("file_tools")
@mcp_server.tool()
async def read_file(path: str) -> str:
    """Read contents of a file.

    Args:
        path: Path to the file to read

    Returns:
        Contents of the file as a string
    """
    with open(path, 'r') as f:
        return f.read()

This one definition works everywhere MCP is supported. No platform-specific code, no proprietary formats.
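To make "one definition" concrete, here is a minimal sketch of how a typed Python function could be turned into the kind of JSON Schema description a client sees when it lists tools. The `describe_tool` helper and the `PY_TO_JSON` table are hypothetical names for illustration, not the SDK's internals; the `name`, `description`, and `inputSchema` keys follow the shape of an MCP tool listing.

```python
import inspect
from typing import Any, Callable

# Hypothetical mapping from Python annotations to JSON Schema type names.
PY_TO_JSON = {str: "string", int: "integer", float: "number", bool: "boolean"}


def describe_tool(func: Callable[..., Any]) -> dict:
    """Build a tool description (name, description, inputSchema) from a function."""
    properties = {}
    required = []
    for name, param in inspect.signature(func).parameters.items():
        # Unknown annotations fall back to "string" in this sketch.
        json_type = PY_TO_JSON.get(param.annotation, "string")
        properties[name] = {"type": json_type}
        # Parameters without a default must be supplied by the caller.
        if param.default is inspect.Parameter.empty:
            required.append(name)
    doc = inspect.getdoc(func) or ""
    return {
        "name": func.__name__,
        "description": doc.splitlines()[0] if doc else "",
        "inputSchema": {
            "type": "object",
            "properties": properties,
            "required": required,
        },
    }


def read_file(path: str, encoding: str = "utf-8") -> str:
    """Read contents of a file."""
    with open(path, "r", encoding=encoding) as f:
        return f.read()


tool = describe_tool(read_file)
print(tool["name"], tool["inputSchema"]["required"])
```

Because the description is plain JSON Schema, any client that understands the protocol can read it without knowing the tool was written in Python.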

3. Protocol-Based Communication

Instead of API-specific methods, MCP uses simple message types. The AI and your tools talk through:

- Discovery: “What tools do you have?”

- Invocation: “Please run this tool with these parameters”

- Results: “Here’s what the tool returned”

- Errors: “Something went wrong, here’s why”
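The four message types above can be sketched as a tiny in-memory dispatcher. This is an illustration under stated assumptions, not the SDK's implementation: `TOOLS` and `handle_message` are hypothetical names, the tool listing omits the `inputSchema` a real server would include, and only the `tools/list` and `tools/call` methods are handled.

```python
import json

# Hypothetical in-memory registry: tool name -> (description, callable).
TOOLS = {
    "add": ("Add two integers.", lambda a, b: a + b),
}


def handle_message(raw: str) -> str:
    """Answer one JSON-RPC 2.0 request with either a result or an error."""
    request = json.loads(raw)
    reply = {"jsonrpc": "2.0", "id": request.get("id")}
    method = request.get("method")
    params = request.get("params", {})

    if method == "tools/list":
        # Discovery: "What tools do you have?"
        reply["result"] = {
            "tools": [{"name": n, "description": d} for n, (d, _) in TOOLS.items()]
        }
    elif method == "tools/call":
        # Invocation: "Please run this tool with these parameters"
        entry = TOOLS.get(params.get("name"))
        if entry is None:
            # Errors: "Something went wrong, here's why"
            reply["error"] = {"code": -32602, "message": "Unknown tool"}
        else:
            value = entry[1](**params.get("arguments", {}))
            # Results: "Here's what the tool returned"
            reply["result"] = {"content": [{"type": "text", "text": str(value)}]}
    else:
        # Standard JSON-RPC code for an unsupported method.
        reply["error"] = {"code": -32601, "message": "Method not found"}
    return json.dumps(reply)


call = {
    "jsonrpc": "2.0",
    "id": 1,
    "method": "tools/call",
    "params": {"name": "add", "arguments": {"a": 2, "b": 3}},
}
print(handle_message(json.dumps(call)))
```

Nothing in the dispatcher knows which model sent the request, which is the point: the caller only has to produce well-formed messages.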

MCP vs Traditional Integration: Code Comparison

Here’s the difference with real examples from each platform’s official API:

Traditional Approach - Platform-Specific Implementations:

OpenAI Function Calling

import json

from openai import OpenAI

client = OpenAI(api_key="your-api-key")

# OpenAI uses the 'tools' parameter (functions is deprecated)
tools = [
    {
        "type": "function",
        "function": {
            "name": "query_database",
            "description": "Execute a SQL query on the database",
            "parameters": {
                "type": "object",
                "properties": {
                    "query": {
                        "type": "string",
                        "description": "The SQL query to execute"
                    }
                },
                "required": ["query"]
            }
        }
    }
]

# Make the API call
response = client.chat.completions.create(
    model="gpt-4-turbo",
    messages=[
        {"role": "user", "content": "Get all users created this month"}
    ],
    tools=tools,
    tool_choice="auto"  # Let the model decide when to use tools
)

# Handle tool calls
if response.choices[0].message.tool_calls:
    for tool_call in response.choices[0].message.tool_calls:
        if tool_call.function.name == "query_database":
            # Arguments arrive as a JSON string, so decode them first
            args = json.loads(tool_call.function.arguments)
            # execute_query is your own database helper (not shown)
            result = execute_query(args["query"])

Google Gemini Function Calling

import google.generativeai as genai

genai.configure(api_key="your-api-key")

# Gemini uses FunctionDeclaration objects (exposed under genai.protos)
query_database = genai.protos.FunctionDeclaration(
    name="query_database",
    description="Execute a SQL query on the database",
    parameters=genai.protos.Schema(
        type=genai.protos.Type.OBJECT,
        properties={
            "query": genai.protos.Schema(
                type=genai.protos.Type.STRING,
                description="The SQL query to execute"
            )
        },
        required=["query"]
    )
)

# Create the model with tools
model = genai.GenerativeModel(
    model_name="gemini-2.0-flash",
    tools=[genai.protos.Tool(function_declarations=[query_database])]
)

# Start a chat session
chat = model.start_chat()
response = chat.send_message("Get all users created this month")

# Handle function calls
for part in response.parts:
    if part.function_call:
        if part.function_call.name == "query_database":
            # Execute the query (execute_query is your own database helper, not shown)
            result = execute_query(part.function_call.args["query"])

            # Send the result back to continue the conversation
            response = chat.send_message(
                genai.protos.Content(
                    parts=[genai.protos.Part(
                        function_response=genai.protos.FunctionResponse(
                            name="query_database",
                            response={"result": result}
                        )
                    )]
                )
            )

Anthropic Claude Tool Use

import anthropic

client = anthropic.Anthropic(api_key="your-api-key")

# Claude uses 'tools' with 'input_schema'
tools = [
    {
        "name": "query_database",
        "description": "Execute a SQL query on the database",
        "input_schema": {
            "type": "object",
            "properties": {
                "query": {
                    "type": "string",
                    "description": "The SQL query to execute"
                }
            },
            "required": ["query"]
        }
    }
]

# Make the API call
response = client.messages.create(
    model="claude-opus-4-20250514",
    max_tokens=1024,
    messages=[
        {"role": "user", "content": "Get all users created this month"}
    ],
    tools=tools
)

# Handle tool use
if response.stop_reason == "tool_use":
    for content in response.content:
        if content.type == "tool_use":
            if content.name == "query_database":
                # Execute the query (execute_query is your own database helper, not shown)
                result = execute_query(content.input["query"])

                # Continue the conversation with the result
                response = client.messages.create(
                    model="claude-opus-4-20250514",
                    max_tokens=1024,
                    tools=tools,  # Keep the tool definitions on follow-up requests
                    messages=[
                        {"role": "user", "content": "Get all users created this month"},
                        {"role": "assistant", "content": response.content},
                        {"role": "user", "content": [{
                            "type": "tool_result",
                            "tool_use_id": content.id,
                            "content": str(result)
                        }]}
                    ]
                )

Key Differences Between Platforms:

Schema Format:

- OpenAI: tools[].function.parameters

- Gemini: FunctionDeclaration.parameters

- Claude: tools[].input_schema

Response Handling:

- OpenAI: response.choices[0].message.tool_calls

- Gemini: response.parts[].function_call

- Claude: response.content[].type == "tool_use"

Continuation Pattern:

- OpenAI: Add function response to messages

- Gemini: Use function_response part

- Claude: Use tool_result content type

Naming Conventions:

- Each platform has slightly different parameter names and structures

- Error handling differs across platforms

- Authentication methods vary
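The response-handling differences listed above are exactly the glue code each team ends up writing. As a rough sketch, the function below normalizes the three shapes into one list of (tool name, arguments) pairs. It works on plain dictionaries that mimic the response fields named above rather than on real SDK objects, and `normalize_tool_calls` is a hypothetical helper, not part of any vendor library.

```python
import json
from typing import Any


def normalize_tool_calls(platform: str, response: dict[str, Any]) -> list[tuple[str, dict]]:
    """Return (tool_name, arguments) pairs from a simplified platform response."""
    calls = []
    if platform == "openai":
        message = response["choices"][0]["message"]
        for call in message.get("tool_calls") or []:
            fn = call["function"]
            # OpenAI delivers arguments as a JSON-encoded string.
            calls.append((fn["name"], json.loads(fn["arguments"])))
    elif platform == "gemini":
        for part in response["parts"]:
            if "function_call" in part:
                fc = part["function_call"]
                calls.append((fc["name"], dict(fc["args"])))
    elif platform == "claude":
        for block in response["content"]:
            if block["type"] == "tool_use":
                calls.append((block["name"], block["input"]))
    else:
        raise ValueError(f"Unsupported platform: {platform}")
    return calls


# Dictionary stand-ins for the three response shapes (illustrative only).
openai_resp = {"choices": [{"message": {"tool_calls": [
    {"function": {"name": "query_database", "arguments": '{"query": "SELECT 1"}'}}
]}}]}
gemini_resp = {"parts": [
    {"function_call": {"name": "query_database", "args": {"query": "SELECT 1"}}}
]}
claude_resp = {"content": [
    {"type": "text", "text": "Let me check."},
    {"type": "tool_use", "name": "query_database", "input": {"query": "SELECT 1"}},
]}

for name, resp in [("openai", openai_resp), ("gemini", gemini_resp), ("claude", claude_resp)]:
    print(name, normalize_tool_calls(name, resp))
```

Every branch in this function is code that exists only because the platforms disagree on structure, not because the tool itself differs.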

MCP Approach - One Implementation for All:

# Universal MCP Implementation
import sqlite3
from contextlib import closing

from mcp.server.fastmcp import FastMCP

mcp = FastMCP("database_tools")


@mcp.tool()
async def query_database(query: str) -> dict:
    """Execute a database query.

    Args:
        query: SQL query to execute

    Returns:
        Query results as a dictionary
    """
    try:
        # closing() releases the connection even if the query fails
        with closing(sqlite3.connect("database.db")) as conn:
            cursor = conn.cursor()
            cursor.execute(query)
            results = cursor.fetchall()
        return {"success": True, "data": results}
    except Exception as e:
        return {"success": False, "error": str(e)}

# This single implementation works with:
# ✓ Claude (native MCP support)
# ✓ GPT-4 (via MCP adapter)
# ✓ Gemini (via MCP adapter)
# ✓ Local models (via MCP bridge)
# ✓ Any future AI with MCP support

The traditional approach requires learning each platform’s SDK, handling their specific authentication, managing different error formats, and maintaining multiple codebases. With MCP, you write once and focus on your actual functionality.

Real-World Example: MCP Server Tutorial 2025

Let’s build a complete, runnable MCP server that you can test today. This example shows how MCP enables tool interoperability.

Step 1: Create the MCP Server

First, install the MCP SDK with FastMCP included:

pip install "mcp[cli]" # Includes FastMCP as part of the official SDK; the quotes keep shells like zsh from expanding the brackets

Then create your MCP server using FastMCP (included in the MCP package):

import os

from mcp.server.fastmcp import FastMCP

# Initialize FastMCP server
mcp = FastMCP("file_tools")


@mcp.tool()
async def read_file(path: str) -> str:
    """Read contents of a file.

    Args:
        path: Path to the file to read

    Returns:
        Contents of the file as a string
    """
    try:
        with open(path, 'r', encoding='utf-8') as f:
            return f.read()
    except FileNotFoundError:
        return f"Error: File {path} not found"
    except Exception as e:
        return f"Error reading file: {str(e)}"


@mcp.tool()
async def list_files(directory: str = ".") -> list[str]:
    """List files in a directory.

    Args:
        directory: Directory path (default: current directory)

    Returns:
        List of filenames in the directory
    """
    try:
        files = os.listdir(directory)
        return [f for f in files if os.path.isfile(os.path.join(directory, f))]
    except Exception as e:
        return [f"Error: {str(e)}"]


# Run the server
if __name__ == "__main__":
    # mcp.run() is synchronous and starts its own event loop
    # (stdio transport by default), so it is not wrapped in asyncio.run()
    mcp.run()

Step 2: Complete Working Example

Here’s a full example you can run today. Save this as mcp_demo_server.py:

#!/usr/bin/env python3
"""MCP Demo Server - A complete example you can run and test"""

import os
import sys
from datetime import datetime
# zoneinfo is in the standard library (Python 3.9+); on Windows, also install tzdata
from zoneinfo import ZoneInfo, ZoneInfoNotFoundError

from mcp.server.fastmcp import FastMCP

# Initialize FastMCP server
mcp = FastMCP("demo_tools")


@mcp.tool()
async def get_time(timezone: str = "UTC") -> str:
    """Get the current time in a specific timezone.

    Args:
        timezone: IANA timezone name (e.g., 'UTC', 'America/New_York', 'Asia/Tokyo')

    Returns:
        Current time as a formatted string
    """
    try:
        now = datetime.now(ZoneInfo(timezone))
        return now.strftime("%Y-%m-%d %H:%M:%S %Z")
    except (ZoneInfoNotFoundError, ValueError):
        return f"Error: Unknown timezone '{timezone}'"


@mcp.tool()
async def calculate(expression: str) -> str:
    """Evaluate a mathematical expression.

    Args:
        expression: Mathematical expression to evaluate

    Returns:
        Result of the calculation
    """
    try:
        # Restricted eval: builtins are removed and only the names below are exposed.
        # This limits casual misuse but is NOT a real sandbox, so keep it to demos.
        allowed_names = {
            "abs": abs, "round": round, "min": min, "max": max,
            "sum": sum, "pow": pow, "len": len
        }
        result = eval(expression, {"__builtins__": {}}, allowed_names)
        return str(result)
    except Exception as e:
        return f"Error: {str(e)}"


@mcp.tool()
async def create_file(filename: str, content: str) -> str:
    """Create a new file with the given content.

    Args:
        filename: Name of the file to create
        content: Content to write to the file

    Returns:
        Success or error message
    """
    try:
        # Safety check - only allow files in current directory
        if "/" in filename or "\\" in filename:
            return "Error: Please use simple filenames only"
        with open(filename, 'w', encoding='utf-8') as f:
            f.write(content)
        return f"Successfully created {filename}"
    except Exception as e:
        return f"Error creating file: {str(e)}"


@mcp.tool()
async def list_directory(path: str = ".") -> list[str]:
    """List files in a directory.

    Args:
        path: Directory path (default: current directory)

    Returns:
        List of files and directories
    """
    try:
        items = os.listdir(path)
        return sorted(items)
    except Exception as e:
        return [f"Error: {str(e)}"]


# Run the server
if __name__ == "__main__":
    # Log to stderr: with the stdio transport, stdout carries the protocol messages
    print("Starting MCP Demo Server...", file=sys.stderr)
    print("Available tools:", file=sys.stderr)
    print(" - get_time: Get current time in any timezone", file=sys.stderr)
    print(" - calculate: Evaluate mathematical expressions", file=sys.stderr)
    print(" - create_file: Create a new file", file=sys.stderr)
    print(" - list_directory: List files in a directory", file=sys.stderr)
    print("\nPress Ctrl+C to stop the server", file=sys.stderr)
    # mcp.run() is synchronous and starts its own event loop
    mcp.run()
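A note on the calculate tool: calling eval with builtins stripped is convenient for a demo, but it is not a real sandbox. One common alternative, sketched here under the assumption that only basic arithmetic is needed, is to parse the expression with the standard `ast` module and evaluate only a whitelist of node types. The `safe_calculate` name is hypothetical and the operator set is deliberately small.

```python
import ast
import operator
from typing import Union

# Operators the evaluator is willing to apply; anything else is rejected.
_BINARY_OPS = {
    ast.Add: operator.add,
    ast.Sub: operator.sub,
    ast.Mult: operator.mul,
    ast.Div: operator.truediv,
    ast.Mod: operator.mod,
    ast.Pow: operator.pow,
}
_UNARY_OPS = {ast.UAdd: operator.pos, ast.USub: operator.neg}

Number = Union[int, float]


def safe_calculate(expression: str) -> Number:
    """Evaluate +, -, *, /, % and ** on numeric literals only."""

    def _eval(node: ast.AST) -> Number:
        # Plain int/float literals are the only values allowed.
        if isinstance(node, ast.Constant) and type(node.value) in (int, float):
            return node.value
        if isinstance(node, ast.BinOp) and type(node.op) in _BINARY_OPS:
            return _BINARY_OPS[type(node.op)](_eval(node.left), _eval(node.right))
        if isinstance(node, ast.UnaryOp) and type(node.op) in _UNARY_OPS:
            return _UNARY_OPS[type(node.op)](_eval(node.operand))
        # Names, calls, attributes, and everything else are refused.
        raise ValueError("Unsupported expression")

    return _eval(ast.parse(expression, mode="eval").body)


print(safe_calculate("2**10"))
print(safe_calculate("-3 + 4 * 2"))
```

Function calls such as `__import__('os')` never reach an interpreter here because call nodes are not on the whitelist. A hardened version would also cap operand sizes so that a huge exponent cannot stall the server.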

Step 3: Configure Claude Desktop

Claude Desktop configuration paths by platform:

macOS: ~/Library/Application Support/Claude/claude_desktop_config.json

Windows: %APPDATA%\Claude\claude_desktop_config.json

Linux: ~/.config/Claude/claude_desktop_config.json

Example Configuration:

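{
  "mcpServers": {
    "file_tools": {
      "command": "python",
      "args": ["/absolute/path/to/file_tools.py"]
    }
  }
}

Use an absolute path to the script: Claude Desktop launches the command from its own working directory, so relative paths usually fail to resolve.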
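If you would rather not edit the JSON by hand, the configuration step can be scripted. The sketch below uses the three config paths listed above and merges a server entry into the "mcpServers" section without touching other entries. `claude_config_path` and `register_server` are hypothetical helper names, and the script assumes the `python` on your PATH is the interpreter that has the MCP SDK installed.

```python
import json
import os
import sys
from pathlib import Path

CONFIG_NAME = "claude_desktop_config.json"


def claude_config_path(platform: str = sys.platform) -> Path:
    """Return the Claude Desktop config location for the given platform."""
    if platform == "darwin":
        return Path.home() / "Library" / "Application Support" / "Claude" / CONFIG_NAME
    if platform.startswith("win"):
        return Path(os.environ.get("APPDATA", "")) / "Claude" / CONFIG_NAME
    return Path.home() / ".config" / "Claude" / CONFIG_NAME


def register_server(config_file: Path, name: str, script: Path) -> dict:
    """Add or replace one entry under "mcpServers", keeping existing entries."""
    config = {}
    if config_file.exists():
        config = json.loads(config_file.read_text(encoding="utf-8"))
    servers = config.setdefault("mcpServers", {})
    # Store an absolute path so the server starts regardless of working directory.
    servers[name] = {"command": "python", "args": [str(script.resolve())]}
    config_file.parent.mkdir(parents=True, exist_ok=True)
    config_file.write_text(json.dumps(config, indent=2), encoding="utf-8")
    return config


# Example call (commented out so importing this file never edits your real config):
# register_server(claude_config_path(), "file_tools", Path("file_tools.py"))
```

Restart Claude Desktop after running it, since the config is read at startup.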

Step 4: Test Your MCP Server

Testing with Claude Desktop:

- Save your MCP server as file_tools.py

- Update your Claude Desktop config (path varies by OS - see above)

- Restart Claude Desktop

- In Claude, you should see your tools available

- Test by asking: “Can you list files in the current directory?”

Testing Locally (Command Line):

# Run your MCP server directly (it waits for a client on stdin/stdout)
python file_tools.py

# Or launch it under the MCP Inspector using the SDK's CLI
mcp dev file_tools.py

# The Inspector lists the available tools and lets you call them interactively

Integration with Other AI Platforms:

While MCP currently has native support in Claude Desktop, the community is building adapters for other platforms:

- OpenAI GPT-4: Community adapter in development

- Google Gemini: Bridge implementation planned

- Local Models: LangChain integration available

Check the MCP GitHub repository for the latest adapter implementations.
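To give a feel for what such an adapter does, here is a minimal sketch of the translation layer only, with no networking. It assumes a tool description in the MCP listing shape (`name`, `description`, `inputSchema`) and converts it to the OpenAI and Claude formats shown earlier, then turns an OpenAI-style tool call back into an MCP `tools/call` request. All three function names are hypothetical, and real adapters also handle transport, sessions, and errors.

```python
import json


def mcp_tool_to_openai(tool: dict) -> dict:
    """Wrap an MCP tool description in the OpenAI 'tools' entry shape."""
    return {
        "type": "function",
        "function": {
            "name": tool["name"],
            "description": tool.get("description", ""),
            "parameters": tool["inputSchema"],
        },
    }


def mcp_tool_to_claude(tool: dict) -> dict:
    """Rename inputSchema to the input_schema key Claude's API expects."""
    return {
        "name": tool["name"],
        "description": tool.get("description", ""),
        "input_schema": tool["inputSchema"],
    }


def openai_call_to_mcp_request(tool_call: dict, request_id: int) -> dict:
    """Turn an OpenAI-style tool call into an MCP tools/call request."""
    fn = tool_call["function"]
    return {
        "jsonrpc": "2.0",
        "id": request_id,
        "method": "tools/call",
        "params": {"name": fn["name"], "arguments": json.loads(fn["arguments"])},
    }


# An MCP-style description of the list_files tool from Step 1.
mcp_tool = {
    "name": "list_files",
    "description": "List files in a directory.",
    "inputSchema": {
        "type": "object",
        "properties": {"directory": {"type": "string"}},
        "required": [],
    },
}

model_call = {"function": {"name": "list_files", "arguments": '{"directory": "."}'}}
print(json.dumps(openai_call_to_mcp_request(model_call, 7), indent=2))
```

The JSON Schema passes through untouched in both directions, which is why these adapters tend to be thin.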

Note: MCP is a new protocol (launched November 2024). Native support and adapters for non-Anthropic platforms are actively being developed by the community.

The best part? Your MCP server stays the same. It doesn’t matter which AI model calls it - it just responds to standard MCP messages.

The Network Effect of Standards

MCP’s real value comes from network effects - the same thing that made HTTP, USB, and Bluetooth work everywhere.

Historical Parallels That Worked

HTTP (1991): Tim Berners-Lee could’ve made the web proprietary. He made it an open standard instead. Now we have the entire World Wide Web.

USB (1996): Before USB, every device had its own connector. The standard didn’t make anyone rich directly, but it made the whole industry better.

Bluetooth (1998): Ericsson could’ve kept it proprietary. They made it a standard and now everything connects wirelessly.

How Standards Create Exponential Value

When MCP becomes the standard:

- More Tools Available: Every tool built for one AI works for all

- Faster Innovation: Developers focus on features, not integration

- Reduced Costs: One integration instead of many

- True Competition: AI models compete on quality, not lock-in

The benefits add up:

- Developer builds MCP tool → Works with all AI models

- More tools available → More developers use MCP

- Bigger ecosystem → AI platforms have to support MCP

- Everyone uses it → It becomes the standard

It’s already happening. Tools built for Claude work with GPT-4 through adapters. Open-source projects are adding MCP support. The snowball is rolling.

For businesses like ours building AI products (SpinePlanner for AR surgery, Curio XR for education), we can pick the best AI model for each job without integration headaches. That’s huge.

Frequently Asked Questions (MCP vs LangChain, OpenAI, Gemini)

What does AI interoperability mean?

AI interoperability means different AI models can use the same tools without separate integrations. With MCP, you write your tool once and it works with Claude, GPT-4, Gemini, and any other MCP-compatible model. Like how any browser can visit any website.

MCP vs LangChain: What’s the Difference?

MCP is a protocol, not a library or framework. LangChain and similar tools just wrap different AI APIs - you still write platform-specific code underneath. MCP creates a standard that AI platforms implement directly. Real interoperability, not just code wrappers.

Can MCP work with any AI model?

MCP has native support in Claude Desktop right now. For other AI models:

- OpenAI/GPT-4: Community adapters being built (check MCP GitHub)

- Google Gemini: Bridges coming soon

- Local Models: Works with LangChain or custom bridges

- Future Support: Any AI provider can add MCP support

The protocol works with everything in theory, but actual support depends on the community and AI companies. Check the MCP docs for what’s available today.

What are the benefits for developers?

Developers save 70-80% of integration time by writing tools once. You can switch AI providers without rewriting code, test different models fairly, and future-proof your apps. When new AI models support MCP, your tools work right away.

What’s Next

MCP’s interoperability is just the beginning. The protocol handles much more than simple tool calls—it manages context, sessions, and complex multi-step workflows.

Coming up next: “How MCP Handles Context Exchange Between AI Models” - where we’ll explore how MCP maintains conversation context, shares state between tools, and enables sophisticated AI workflows that span multiple models and sessions.

Try It Yourself

Ready to break free from AI silos? Here’s how to get started:

- Install MCP: pip install "mcp[cli]"

- Save the demo server above as mcp_demo_server.py

- Configure Claude Desktop with the server (see Step 3 above)

- Test in Claude: “What time is it in Tokyo?” or “Calculate 2^10”

For more examples and documentation:

Want to learn more about MCP? Check out our blog for AI development tips, or contact us if you’re building with MCP and need help.

This is article 4 of 24 in our MCP series. We’re documenting the protocol that’s changing AI development, from basics to advanced stuff.

About Angry Shark Studio

Angry Shark Studio is a professional Unity AR/VR development studio specializing in mobile multiplatform applications and AI solutions. Our team includes Unity Certified Expert Programmers with extensive experience in AR/VR development.
