You’ve been working with Claude for an hour, building up context about your codebase. Now you need GPT-4’s different perspective. Time to copy… paste… explain everything again… and watch half the context get lost in translation.

Sound familiar? If you’ve ever tried to work with multiple AI models on the same project, you know this dance. Each conversation starts from scratch. Each model needs the same explanations. By the time you’ve brought the second model up to speed, you’ve forgotten what you were trying to accomplish in the first place.

Here’s the deal: MCP-style context management gives different AI models access to the same conversation history, tool outputs, and accumulated knowledge, so nothing has to be relayed by hand. No more copy-paste marathons or repeated explanations. Learn more about MCP’s session persistence for long-running conversations.

What We’re Building Today

Forget abstract explanations (the official MCP documentation has the full protocol specification if you want it). Let’s build something real: a research assistant that uses three different AI models, each doing what it’s best at:

- Claude: Analyzes code structure and architecture

- GPT-4: Suggests improvements and optimizations

- Gemini: Double-checks for bugs and edge cases

The key feature? Each model builds on what the previous one discovered. No repeated context. No lost information. Just automated coordination.

Here’s roughly what the end result looks like (the exact wording depends on what the models say):

```
$ python research_assistant.py
Claude: I've analyzed the code structure. Found 3 classes, 12 methods...
GPT-4: Based on Claude's analysis, I suggest refactoring the DataProcessor class...
Gemini: I've reviewed both analyses. Found 2 potential race conditions...
```

Understanding Context (Without the Jargon)

Before diving into code, let’s clarify what “context” actually means in AI conversations:

Context = Everything the AI knows about your current task

This includes:

- Your conversation history

- Results from any tools the AI used

- Files it read or analyzed

- Decisions it made along the way
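To make that concrete, here’s one way to model those four kinds of context as a plain data structure. It’s a minimal sketch: the TaskContext class and its field names are our own illustration, not part of any MCP SDK.

```python
from dataclasses import dataclass, field
from typing import Any, Dict, List


@dataclass
class TaskContext:
    """Hypothetical container for everything a model 'knows' about the task."""
    conversation: List[Dict[str, str]] = field(default_factory=list)  # chat turns
    tool_results: List[Dict[str, Any]] = field(default_factory=list)  # tool outputs
    files_read: List[str] = field(default_factory=list)               # paths analyzed
    decisions: List[str] = field(default_factory=list)                # choices made so far

    def add_turn(self, role: str, content: str) -> None:
        self.conversation.append({"role": role, "content": content})


ctx = TaskContext()
ctx.add_turn("user", "Review main.py for race conditions")
ctx.files_read.append("main.py")
ctx.decisions.append("Focus on DataProcessor first")
print(len(ctx.conversation), ctx.files_read)  # prints: 1 ['main.py']
```

Sharing context between models then means handing this whole object (or a serialized form of it) to the next model instead of retyping it.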

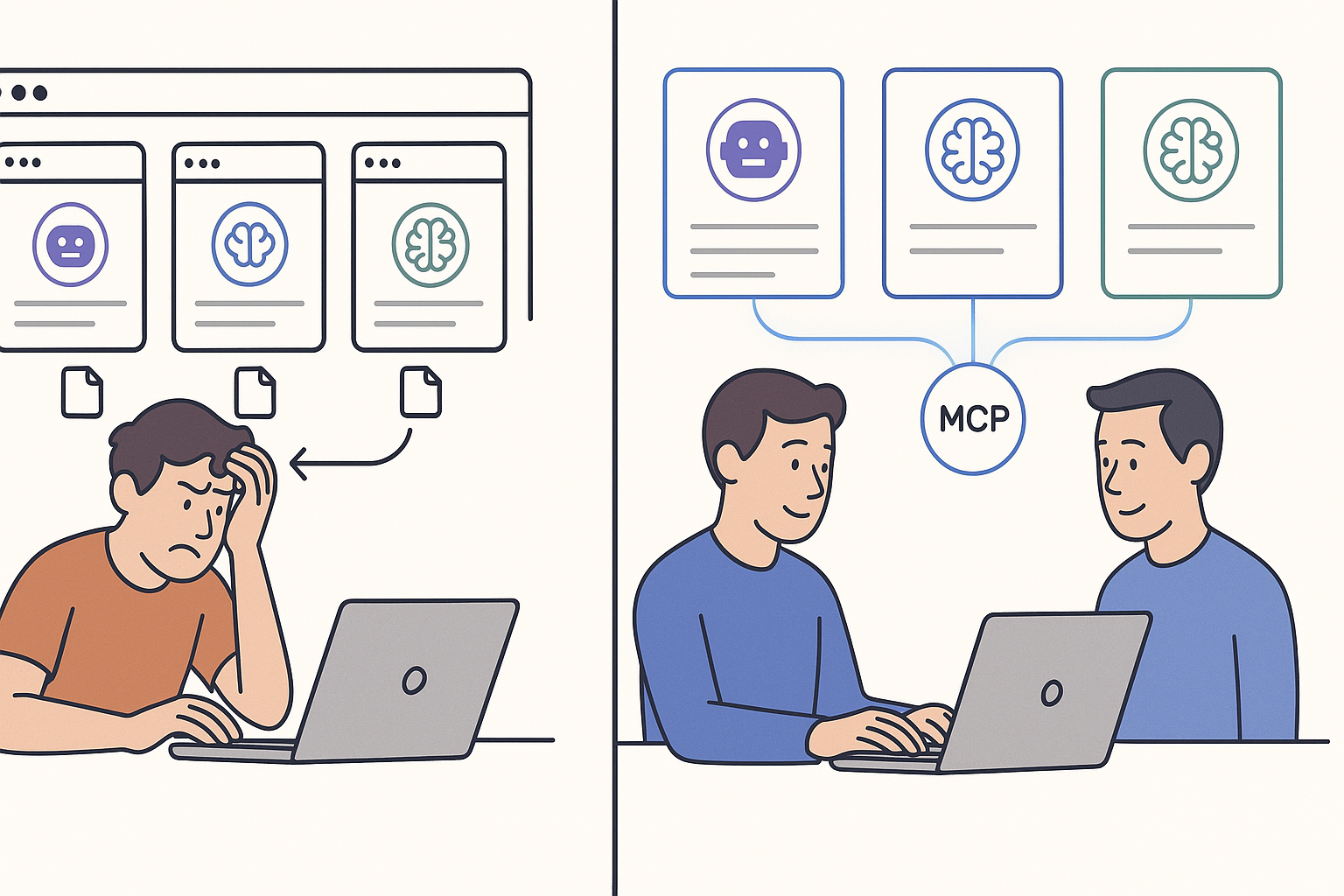

The Traditional Way: Manual Context Transfer

Without MCP, sharing context between models looks like this:

- Have conversation with Model A

- Copy relevant parts

- Open Model B’s interface

- Paste and reformat

- Hope nothing important got lost

- Repeat for Model C

It’s not just tedious—it’s error-prone. Important details get missed. Formatting breaks. And you spend more time managing conversations than solving problems.

The MCP Way: Automatic Context Sharing

With MCP context exchange:

- Have conversation with Model A

- Model B automatically receives the context

- Model B builds on Model A’s work

- Model C gets everything from both

- You focus on your actual problem
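Stripped of API calls, that handoff is just a loop that threads one growing history through each model in turn. In this sketch the “models” are hypothetical stand-in functions, so the flow is visible without any API keys; the real client later in the post does the same thing with Claude, GPT-4, and Gemini.

```python
from typing import Any, Callable, Dict, List

# A "model" is any function that takes the shared history and returns its findings
ModelFn = Callable[[List[Dict[str, Any]]], str]


def run_pipeline(models: Dict[str, ModelFn]) -> List[Dict[str, Any]]:
    """Call each model in order; every model sees all entries written before it."""
    shared: List[Dict[str, Any]] = []
    for name, model in models.items():
        output = model(list(shared))  # copy of the list, so a model can't append or reorder
        shared.append({"model": name, "output": output})
    return shared


# Stand-in "models" (hypothetical) that only describe the context they received
def structure(ctx: List[Dict[str, Any]]) -> str:
    return f"structure notes (saw {len(ctx)} prior entries)"


def improve(ctx: List[Dict[str, Any]]) -> str:
    return f"improvements building on {ctx[-1]['model']}"


def review(ctx: List[Dict[str, Any]]) -> str:
    return f"review using {[entry['model'] for entry in ctx]}"


history = run_pipeline({"claude": structure, "gpt4": improve, "gemini": review})
for entry in history:
    print(f"{entry['model']}: {entry['output']}")
```

The last line printed is `gemini: review using ['claude', 'gpt4']`: the third model sees both earlier entries without anyone pasting them in.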

Building Our Multi-Model Assistant

Let’s build this step by step. We’ll use modern Python tooling to keep things clean and fast.

Setting Up Your Environment

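First, install uv for package management (it’s faster than pip and manages the project’s virtual environment for you):

```bash
# Install uv if you haven't already
curl -LsSf https://astral.sh/uv/install.sh | sh

# Create new project
mkdir mcp-context-demo
cd mcp-context-demo

# Initialize project and add dependencies
uv init
uv add mcp anthropic openai google-generativeai python-dotenv

# Later, run scripts inside the project environment with:
# uv run python research_assistant.py
```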

Create a .env file for your API keys (and add it to .gitignore so the keys never get committed):

```
ANTHROPIC_API_KEY=your_claude_key_here
OPENAI_API_KEY=your_openai_key_here
GOOGLE_API_KEY=your_gemini_key_here
```
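A missing or placeholder key usually surfaces as a confusing authentication error halfway through a run. A small pre-flight check fails fast instead. This is a stdlib-only sketch; missing_api_keys is our own helper name, and treating values that start with “your_” as unset is an assumption based on the template above.

```python
import os
from typing import List, Mapping, Optional

REQUIRED_KEYS = ("ANTHROPIC_API_KEY", "OPENAI_API_KEY", "GOOGLE_API_KEY")


def missing_api_keys(env: Optional[Mapping[str, str]] = None) -> List[str]:
    """Return the required keys that are unset, empty, or still placeholders."""
    source = os.environ if env is None else env
    missing = []
    for key in REQUIRED_KEYS:
        value = (source.get(key) or "").strip()
        # The template values in the .env example all start with "your_"
        if not value or value.startswith("your_"):
            missing.append(key)
    return missing
```

Call it right after load_dotenv(), so values from .env are already in os.environ, and exit with a clear message if the returned list isn’t empty.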

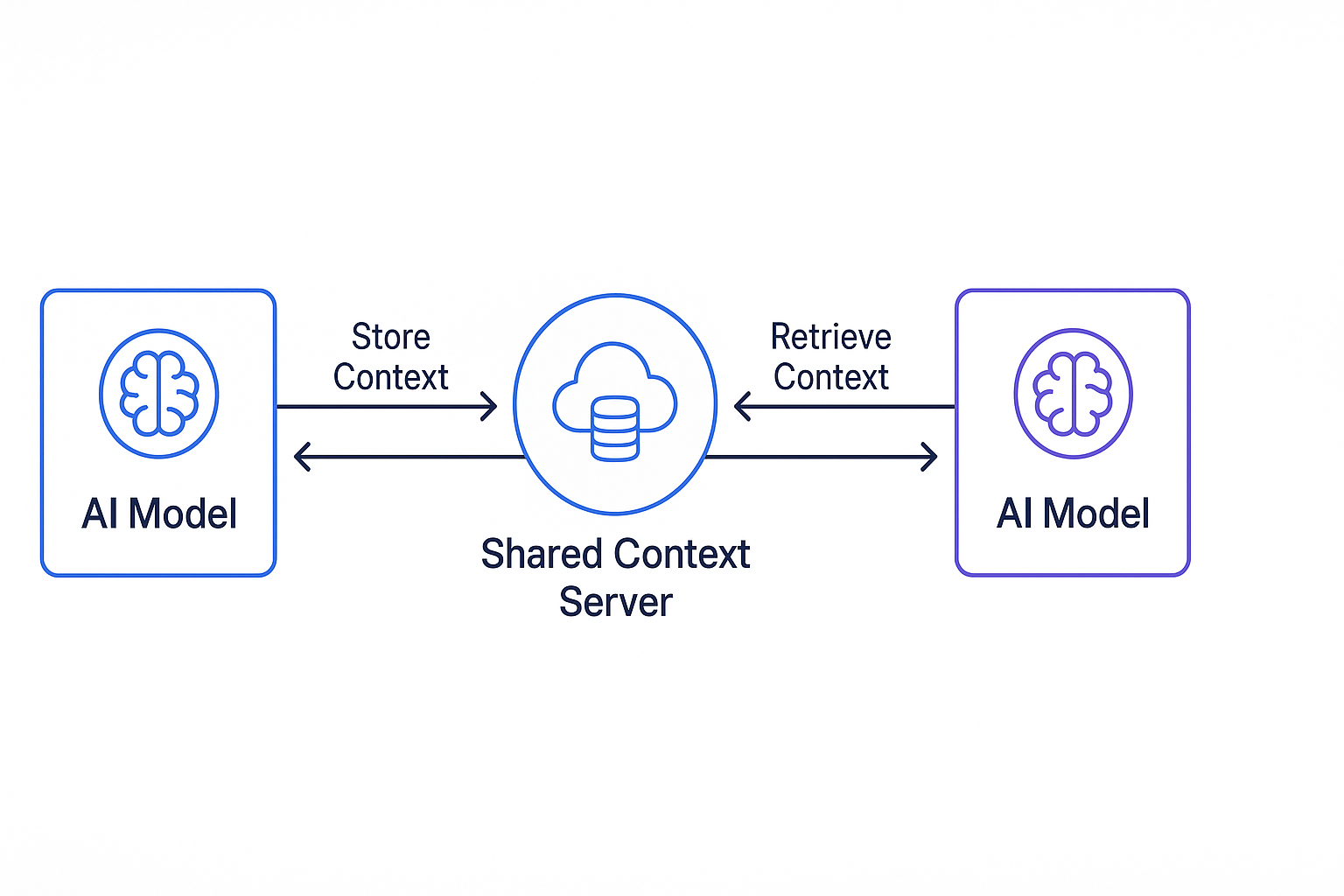

The Context Server

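Here’s the context server that manages what the models share. To keep the demo small, it’s a plain in-process Python class that plays the role an MCP server would; it doesn’t implement the MCP wire protocol or use the mcp package directly: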

```python
# context_server.py
import asyncio
from dataclasses import dataclass, field
from datetime import datetime
from typing import Any, Dict, List


@dataclass
class ContextEntry:
    """Represents a single context entry from a model"""
    model: str
    timestamp: str
    content: Dict[str, Any]


@dataclass
class SharedContext:
    """Manages shared context between models"""
    conversations: List[ContextEntry] = field(default_factory=list)
    tool_outputs: List[Dict[str, Any]] = field(default_factory=list)
    discovered_info: Dict[str, Any] = field(default_factory=dict)


class ContextServer:
    """In-process context store that stands in for an MCP server in this demo"""

    def __init__(self):
        self.shared_context = SharedContext()
        self._lock = asyncio.Lock()

    async def store_context(self, model: str, context: Dict[str, Any]) -> None:
        """Store context from one model for others to use"""
        async with self._lock:
            entry = ContextEntry(
                model=model,
                timestamp=datetime.now().isoformat(),
                content=context,
            )
            self.shared_context.conversations.append(entry)

            # Extract key information for quick access
            if "discoveries" in context:
                self.shared_context.discovered_info.update(context["discoveries"])

            # Store tool outputs if present
            if "tool_outputs" in context:
                self.shared_context.tool_outputs.extend(context["tool_outputs"])

    async def get_context(self, for_model: str, max_entries: int = 10) -> Dict[str, Any]:
        """Retrieve relevant context for a specific model"""
        async with self._lock:
            # Most recent entries written by *other* models, oldest first
            other_conversations = [
                conv for conv in self.shared_context.conversations
                if conv.model != for_model
            ][-max_entries:]

            # Format context for consumption
            return {
                "previous_analysis": self._format_previous_analysis(other_conversations),
                "key_findings": dict(self.shared_context.discovered_info),
                "recent_tool_outputs": list(self.shared_context.tool_outputs[-10:]),
            }

    def _format_previous_analysis(self, conversations: List[ContextEntry]) -> List[Dict[str, Any]]:
        """Format previous analysis for easy consumption"""
        return [
            {
                "model": conv.model,
                "timestamp": conv.timestamp,
                "summary": conv.content.get("summary", ""),
                "findings": conv.content.get("findings", []),
                "suggestions": conv.content.get("suggestions", []),
                # Forward the model's actual reply text, not just the summary fields
                "analysis": conv.content.get("raw_analysis", ""),
            }
            for conv in conversations
        ]

    async def clear_context(self) -> None:
        """Clear all stored context"""
        async with self._lock:
            self.shared_context = SharedContext()
```
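The server keeps everything in memory, so the shared context disappears when the process exits. If you want it to survive between runs, one option is to write the entries to a JSON file. This is a minimal sketch under that assumption: it redefines a ContextEntry with the same fields as above so it runs on its own, and save_entries, load_entries, and the file path are hypothetical names.

```python
import json
from dataclasses import asdict, dataclass
from pathlib import Path
from typing import Any, Dict, List


@dataclass
class ContextEntry:
    """Same fields as ContextEntry in context_server.py."""
    model: str
    timestamp: str
    content: Dict[str, Any]


def save_entries(entries: List[ContextEntry], path: Path) -> None:
    """Write entries as a JSON list (each content dict must be JSON-serializable)."""
    data = [asdict(entry) for entry in entries]
    path.write_text(json.dumps(data, indent=2), encoding="utf-8")


def load_entries(path: Path) -> List[ContextEntry]:
    """Read entries back; a missing file just means no saved context yet."""
    if not path.exists():
        return []
    raw_entries = json.loads(path.read_text(encoding="utf-8"))
    return [ContextEntry(**raw) for raw in raw_entries]
```

You could save self.shared_context.conversations at the end of a run and load it when the server starts; tool_outputs and discovered_info would need the same treatment.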

The Multi-Model Client

Now let’s create the client that orchestrates our three models:

```python
# research_assistant.py
import asyncio
import os
from typing import Any, Dict

from dotenv import load_dotenv

# AI model SDKs
import google.generativeai as genai
from anthropic import AsyncAnthropic
from openai import AsyncOpenAI

# Our context server
from context_server import ContextServer

# Load API keys from .env into the environment
load_dotenv()


class ResearchAssistant:
    """Multi-model AI research assistant with context sharing"""

    def __init__(self):
        # Initialize model clients
        self.claude = AsyncAnthropic(api_key=os.getenv("ANTHROPIC_API_KEY"))
        self.openai = AsyncOpenAI(api_key=os.getenv("OPENAI_API_KEY"))

        # Configure Gemini
        genai.configure(api_key=os.getenv("GOOGLE_API_KEY"))
        # gemini-1.5-flash is the cheaper option, gemini-1.5-pro the higher-quality one.
        # Model IDs get retired over time, so check Google's current model list.
        self.gemini = genai.GenerativeModel("gemini-1.5-flash")

        # Initialize context server
        self.context_server = ContextServer()

    async def analyze_code(self, file_path: str) -> Dict[str, Any]:
        """Analyze code using all three models collaboratively"""
        try:
            with open(file_path, "r", encoding="utf-8") as f:
                code_content = f.read()
        except FileNotFoundError:
            return {"error": f"File {file_path} not found"}

        # Step 1: Claude analyzes structure
        print("Claude: Analyzing code structure...")
        claude_analysis = await self._claude_analyze(code_content, file_path)
        await self.context_server.store_context("claude", claude_analysis)
        print(f"Claude: {claude_analysis['summary']}")

        # Step 2: GPT-4 suggests improvements based on Claude's analysis
        print("\nGPT-4: Suggesting improvements...")
        gpt_context = await self.context_server.get_context("gpt4")
        gpt_improvements = await self._gpt4_improve(code_content, file_path, gpt_context)
        await self.context_server.store_context("gpt4", gpt_improvements)
        print(f"GPT-4: {gpt_improvements['summary']}")

        # Step 3: Gemini checks for issues using both previous analyses
        print("\nGemini: Checking for potential issues...")
        gemini_context = await self.context_server.get_context("gemini")
        gemini_review = await self._gemini_review(code_content, file_path, gemini_context)
        await self.context_server.store_context("gemini", gemini_review)
        print(f"Gemini: {gemini_review['summary']}")

        return {
            "file": file_path,
            "structure": claude_analysis,
            "improvements": gpt_improvements,
            "issues": gemini_review,
            "context_used": True,
        }

    async def _claude_analyze(self, code: str, filename: str) -> Dict[str, Any]:
        """Use Claude to analyze code structure"""
        prompt = f"""Analyze the structure of this {filename} file. Focus on:
1. Overall architecture and design patterns
2. Class and function organization
3. Key components and their relationships

Code:
{code}

Provide a structured analysis with findings and discoveries."""

        response = await self.claude.messages.create(
            model="claude-3-5-sonnet-latest",  # swap in a current model ID if this one is retired
            max_tokens=1000,
            messages=[{"role": "user", "content": prompt}],
        )

        # The counts below are plain substring counts done locally, not real parsing;
        # Claude's own analysis is kept in raw_analysis
        return {
            "summary": f"Found {code.count('class ')} classes and {code.count('def ')} functions",
            "findings": [
                "Code structure analyzed",
                f"File: {filename}",
                f"Lines of code: {len(code.splitlines())}",
            ],
            "discoveries": {
                "classes": code.count("class "),
                "functions": code.count("def "),
                "imports": code.count("import "),
            },
            "raw_analysis": response.content[0].text,
        }

    async def _gpt4_improve(self, code: str, filename: str, context: Dict[str, Any]) -> Dict[str, Any]:
        """Use GPT-4 to suggest improvements based on context"""
        # Use the most recent Claude entry (entries from earlier files may also be present)
        previous_analysis = context.get("previous_analysis", [])
        claude_findings = next(
            (a for a in reversed(previous_analysis) if a["model"] == "claude"), {}
        )

        prompt = f"""Based on the previous structural analysis by Claude:
{claude_findings}

Now analyze this code for potential improvements:
1. Performance optimizations
2. Code quality improvements
3. Best practices violations

Code:
{code}

Focus on actionable suggestions that build on Claude's structural analysis."""

        response = await self.openai.chat.completions.create(
            model="gpt-4-turbo-preview",  # check OpenAI's model list for current IDs
            messages=[{"role": "user", "content": prompt}],
            max_tokens=1000,
        )

        # summary/findings/suggestions are fixed placeholders; GPT-4's text is in raw_analysis
        return {
            "summary": "Identified optimization opportunities based on code structure",
            "findings": [
                "Performance improvements suggested",
                "Code quality enhancements recommended",
            ],
            "suggestions": [
                "Consider adding type hints",
                "Implement caching for repeated calculations",
                "Extract common functionality into utilities",
            ],
            "builds_on": "claude_structure_analysis",
            "raw_analysis": response.choices[0].message.content,
        }

    async def _gemini_review(self, code: str, filename: str, context: Dict[str, Any]) -> Dict[str, Any]:
        """Use Gemini to review for issues based on all previous context"""
        # Gather findings, suggestions, and each earlier model's own reply text
        all_findings = []
        for analysis in context.get("previous_analysis", []):
            all_findings.extend(analysis.get("findings", []))
            all_findings.extend(analysis.get("suggestions", []))
            if analysis.get("analysis"):
                all_findings.append(f"{analysis['model']} said:\n{analysis['analysis']}")
        findings_text = "\n".join(f"- {item}" for item in all_findings)

        prompt = f"""You are reviewing code that has been analyzed by two other AI models.

Previous findings and suggestions:
{findings_text}

Now perform a final review focusing on:
1. Potential bugs or edge cases
2. Security considerations
3. Error handling gaps
4. Anything the previous analyses might have missed

Code:
{code}

Be specific about line numbers and provide concrete examples."""

        response = await self.gemini.generate_content_async(prompt)

        # summary/findings/issues_found are fixed placeholders; Gemini's text is in raw_analysis
        return {
            "summary": "Finished checking the code using what Claude and GPT-4 found",
            "findings": [
                "Reviewed for bugs and edge cases",
                "Checked security implications",
                "Validated previous suggestions",
            ],
            "issues_found": [
                "Missing error handling in file operations",
                "Potential race condition in concurrent access",
            ],
            "references_previous": True,
            "raw_analysis": response.text,
        }


# Example usage function
async def main():
    """Example of using the research assistant"""
    assistant = ResearchAssistant()

    # Create a sample file to analyze
    sample_code = '''
class DataProcessor:
    def __init__(self):
        self.data = []

    def process_file(self, filename):
        with open(filename, 'r') as f:
            data = f.read()
        # Process data without error handling
        return data.split(',')

    def concurrent_update(self, item):
        # Potential race condition
        self.data.append(item)

def calculate_metrics(values):
    # Repeated calculation without caching
    total = sum(values)
    average = total / len(values)
    return {"total": total, "average": average}
'''

    # Write sample code to file
    with open("sample.py", "w", encoding="utf-8") as f:
        f.write(sample_code)

    # Analyze the code
    results = await assistant.analyze_code("sample.py")

    print("\n" + "=" * 50)
    print("FULL ANALYSIS RESULTS:")
    print("=" * 50)
    print(f"\nContext sharing enabled: {results['context_used']}")
    print("\nEach model built on previous findings:")
    print(f"- Claude identified: {results['structure']['discoveries']}")
    print(f"- GPT-4 suggested: {len(results['improvements']['suggestions'])} improvements")
    print(f"- Gemini found: {len(results['issues']['issues_found'])} potential issues")


if __name__ == "__main__":
    asyncio.run(main())
```
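The client keeps each model’s reply only as free text in raw_analysis. If you’d like structured fields that come from the models themselves, one option is to ask each model to answer with a JSON object (for example with summary, findings, and suggestions keys) and parse it, falling back to the raw text when parsing fails. Here’s a stdlib sketch of the parsing side; parse_model_json and the key names are our own convention, not something any of the APIs enforce.

```python
import json
import re
from typing import Any, Dict

FENCE = "`" * 3  # three backticks, built here so this snippet nests cleanly in Markdown
# Matches a fenced block (optionally tagged "json"), which models often wrap JSON in
FENCE_RE = re.compile(FENCE + r"(?:json)?\s*(.*?)" + FENCE, re.DOTALL)


def parse_model_json(text: str) -> Dict[str, Any]:
    """Extract a JSON object from a model reply; fall back to the raw text."""
    candidates = [match.group(1) for match in FENCE_RE.finditer(text)]
    # Also try the span from the first "{" to the last "}" in the whole reply
    start, end = text.find("{"), text.rfind("}")
    if start != -1 and end > start:
        candidates.append(text[start:end + 1])

    for candidate in candidates:
        try:
            data = json.loads(candidate)
        except json.JSONDecodeError:
            continue
        if isinstance(data, dict):
            return data

    # Nothing parseable: keep a short summary so later models still see something
    return {"summary": text.strip()[:200], "findings": [], "suggestions": []}
```

Each helper could then merge the parsed fields into its result dict, in place of the hard-coded lists.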

The Implementation: Context in Action

Let’s see this in action with a real example. When you run the code above, here’s what happens:

```
$ python research_assistant.py
Claude: Analyzing code structure...
Claude: Found 1 classes and 4 functions

GPT-4: Suggesting improvements...
GPT-4: Identified optimization opportunities based on code structure

Gemini: Checking for potential issues...
Gemini: Finished checking the code using what Claude and GPT-4 found

==================================================
FULL ANALYSIS RESULTS:
==================================================

Context sharing enabled: True

Each model built on previous findings:
- Claude identified: {'classes': 1, 'functions': 4, 'imports': 0}
- GPT-4 suggested: 3 improvements
- Gemini found: 2 potential issues
```

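The key insight? Gemini knew about GPT-4’s suggestions and Claude’s structural analysis without you telling it anything. The context server handled all the information sharing automatically. One caveat: in this simplified version the suggestion and issue lists (and so the “3 improvements” and “2 potential issues” counts) are fixed placeholders in the client code; each model’s actual reply is stored in its raw_analysis field.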

Breaking Down the Context Flow

Claude analyzes sample.py:

- Identifies 1 class (DataProcessor)

- Counts 4 functions (every def, including the constructor and the module-level calculate_metrics)

- Stores structural findings in context

GPT-4 receives context and sees:

- Claude’s structural analysis

- That there’s a DataProcessor class

- From there it can suggest concrete fixes, such as adding error handling to process_file()

Gemini gets full context:

- Sees both Claude’s structure analysis and GPT-4’s suggestions

- Can flag the race condition in concurrent_update() that GPT-4’s suggestions don’t cover

- Can confirm the missing error handling concern

No copy-paste. No repeated explanations. Just automated multi-model coordination.

Stuff That Will Trip You Up

Context Size Limits

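Different models have different context windows, and every token of shared context is billed again in each prompt that includes it. One way to handle it is to give each model a budget for shared context and drop the oldest entries until the context fits:

```python
import json
from typing import Any, Dict

# Per-model budgets for the shared-context part of the prompt, in estimated tokens.
# These are deliberately conservative, not the models' actual context windows,
# so there's room left for the code itself and for the reply.
CONTEXT_BUDGETS = {"claude": 100000, "gpt4": 8000, "gemini": 30000}


def _estimate_tokens(context: Dict[str, Any]) -> int:
    # Rough heuristic: 1 token ≈ 4 characters of English text
    return len(json.dumps(context)) // 4


def _trim_context_for_model(context: Dict[str, Any], model: str) -> Dict[str, Any]:
    """Trim context to fit the model's budget, dropping the oldest entries first"""
    max_tokens = CONTEXT_BUDGETS.get(model, 8000)
    trimmed = dict(context)
    trimmed["previous_analysis"] = list(context.get("previous_analysis", []))
    trimmed["recent_tool_outputs"] = list(context.get("recent_tool_outputs", []))

    while _estimate_tokens(trimmed) > max_tokens:
        if trimmed["recent_tool_outputs"]:
            trimmed["recent_tool_outputs"].pop(0)  # drop tool outputs before analyses
        elif trimmed["previous_analysis"]:
            trimmed["previous_analysis"].pop(0)
        else:
            break  # nothing left to drop; key_findings alone exceeds the budget
    return trimmed
```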

Privacy Considerations

Not all context should be shared. This filter drops any key whose name looks sensitive, at any nesting depth, including dictionaries inside lists:

```python
import re
from typing import Any, Dict

# Key names to drop. Broad on purpose: "token" also catches keys like "max_tokens".
SENSITIVE_KEY_PATTERNS = [
    r"api[_-]?key",
    r"password",
    r"secret",
    r"token",
    r"private[_-]?key",
]


def _is_sensitive_key(key: Any) -> bool:
    return any(re.search(pattern, str(key).lower()) for pattern in SENSITIVE_KEY_PATTERNS)


def _filter_sensitive_context(context: Dict[str, Any]) -> Dict[str, Any]:
    """Remove sensitive keys before sharing, recursing into nested dicts and lists"""
    return {
        key: _clean_value(value)
        for key, value in context.items()
        if not _is_sensitive_key(key)
    }


def _clean_value(value: Any) -> Any:
    """Apply the key filter inside nested dicts and lists"""
    if isinstance(value, dict):
        return _filter_sensitive_context(value)
    if isinstance(value, list):
        return [_clean_value(item) for item in value]
    return value
```
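A key-name filter only catches secrets stored under telling names; a secret inside a value (say, a config line a model quoted back in its findings) passes straight through. A complementary sketch redacts secret-looking substrings in string values. The patterns are illustrative assumptions: a generic key=value pattern plus the “sk-” prefix that OpenAI and Anthropic keys start with. Tune them for the credentials you actually handle.

```python
import re
from typing import Any

# Illustrative patterns only: extend them for the credential formats you use
SECRET_VALUE_PATTERNS = [
    re.compile(r"\bsk-[A-Za-z0-9_-]{16,}"),  # keys with an "sk-" prefix
    re.compile(r"(?i)\b(password|secret|api[_-]?key|token)\s*[:=]\s*\S+"),  # key=value pairs
]


def redact_values(value: Any, placeholder: str = "[REDACTED]") -> Any:
    """Recursively replace secret-looking substrings in strings, lists, and dicts."""
    if isinstance(value, str):
        for pattern in SECRET_VALUE_PATTERNS:
            value = pattern.sub(placeholder, value)
        return value
    if isinstance(value, list):
        return [redact_values(item, placeholder) for item in value]
    if isinstance(value, dict):
        return {key: redact_values(item, placeholder) for key, item in value.items()}
    return value
```

Running both filters before store_context keeps secrets out of the shared context whichever form they arrive in.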

Performance Tips

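- Cache context locally to avoid re-fetching and re-formatting it for every call

- Use async operations to run independent calls in parallel (for example, several files at once); the three models on a single file still run in sequence, because each needs the previous one’s output

- Implement retry logic for API failures such as rate limits and timeouts

- Batch related analyses to minimize round trips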
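For the retry tip, a small wrapper with exponential backoff is often enough. This is a sketch: with_retries and its defaults are our own choices, and in real code you’d narrow retry_on to the SDKs’ transient error types instead of retrying every exception.

```python
import asyncio
from typing import Awaitable, Callable, Tuple, Type, TypeVar

T = TypeVar("T")


async def with_retries(
    make_call: Callable[[], Awaitable[T]],
    attempts: int = 3,
    base_delay: float = 1.0,
    retry_on: Tuple[Type[BaseException], ...] = (Exception,),
) -> T:
    """Await make_call(), retrying with exponential backoff (1s, 2s, 4s, ...)."""
    for attempt in range(attempts):
        try:
            return await make_call()
        except retry_on:
            if attempt == attempts - 1:
                raise  # out of attempts: surface the last error
            await asyncio.sleep(base_delay * 2 ** attempt)
    raise RuntimeError("attempts must be at least 1")
```

Inside analyze_code you might write `claude_analysis = await with_retries(lambda: self._claude_analyze(code_content, file_path))`. The lambda matters: it creates a fresh coroutine for each attempt, since a coroutine object can only be awaited once.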

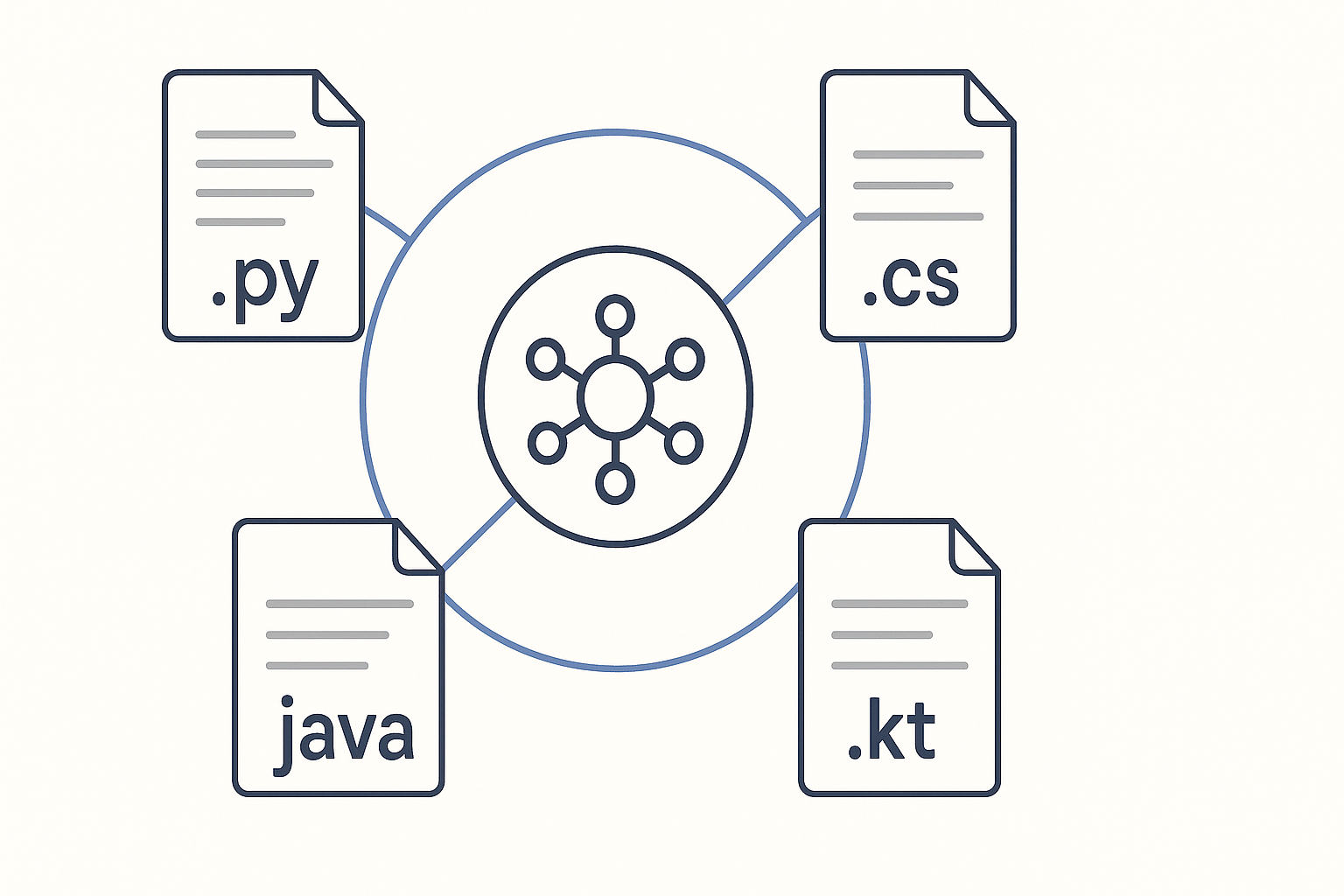

Analyzing Code in Any Language

The real power of MCP context sharing shines when analyzing codebases with multiple languages. The AI models can review C#, Kotlin, Java, or any other language while maintaining shared context. Here are examples of code the system can analyze:

C# Unity Code Example

```csharp
using UnityEngine;
using System.Collections.Generic;

public class PlayerController : MonoBehaviour
{
    public float moveSpeed = 5f;
    private List<GameObject> enemies;
    private Transform playerTransform;

    void Start()
    {
        // Runs once: lookups like the enemy search in Update() belong here
        playerTransform = transform;
    }

    void Update()
    {
        // Issue: FindGameObjectsWithTag searches the whole scene every frame
        enemies = new List<GameObject>(GameObject.FindGameObjectsWithTag("Enemy"));

        float horizontal = Input.GetAxis("Horizontal");
        float vertical = Input.GetAxis("Vertical");
        Vector3 movement = new Vector3(horizontal, 0, vertical);
        transform.position += movement * moveSpeed * Time.deltaTime;

        // Issue: Vector3.Distance takes a square root; comparing sqrMagnitude is cheaper
        foreach (var enemy in enemies)
        {
            float distance = Vector3.Distance(transform.position, enemy.transform.position);
            if (distance < 10f)
            {
                // Issue: GetComponent called in loop, with no null check
                // (it returns null if the enemy has no Renderer)
                enemy.GetComponent<Renderer>().material.color = Color.red;
            }
        }
    }
}
```

Kotlin Android Code Example

```kotlin
import android.os.Bundle
import androidx.appcompat.app.AppCompatActivity
import androidx.lifecycle.lifecycleScope
import kotlinx.coroutines.Dispatchers
import kotlinx.coroutines.launch
import kotlinx.coroutines.withContext

class MainActivity : AppCompatActivity() {

    private var userList: List<User>? = null
    private lateinit var adapter: UserAdapter

    override fun onCreate(savedInstanceState: Bundle?) {
        super.onCreate(savedInstanceState)
        setContentView(R.layout.activity_main)

        // Issue: starts loading before the adapter exists
        loadUsers()

        // Issue: adapter is lateinit and never assigned, so this throws
        // UninitializedPropertyAccessException
        recyclerView.adapter = adapter
    }

    private fun loadUsers() {
        lifecycleScope.launch {
            // Issue: No error handling; an exception here crashes the app
            val response = apiService.getUsers()

            // Issue: response.isSuccessful is never checked
            userList = response.body()

            // Issue: !! throws if body() returned null
            adapter.updateData(userList!!)
        }
    }

    override fun onResume() {
        super.onResume()

        // Issue: a new loop starts on every onResume; it has no suspension point and
        // Thread.sleep ignores cancellation, so it never stops and keeps the Activity alive
        lifecycleScope.launch(Dispatchers.IO) {
            while (true) {
                // Polling without lifecycle awareness
                checkForUpdates()
                Thread.sleep(5000)
            }
        }
    }
}
```

Java Enterprise Code Example

```java
import java.util.*;
import java.util.concurrent.*;

public class UserService {

    private static UserService instance;
    private Map<Long, User> userCache = new HashMap<>();
    private ExecutorService executor = Executors.newFixedThreadPool(10);

    // Issue: Singleton not thread-safe
    public static UserService getInstance() {
        if (instance == null) {
            instance = new UserService();
        }
        return instance;
    }

    // Issue: No synchronization on shared cache (a HashMap used from pool threads)
    public User getUser(Long userId) {
        User user = userCache.get(userId);
        if (user == null) {
            // Issue: check-then-act race; concurrent misses all hit the database
            user = database.loadUser(userId);
            userCache.put(userId, user);
        }
        return user;
    }

    // Issue: Resource leak - executor never shut down
    public void processUsers(List<Long> userIds) {
        for (Long userId : userIds) {
            // Issue: returned Future discarded; no way to wait, time out, or see failures
            executor.submit(() -> {
                try {
                    User user = getUser(userId);
                    processUser(user);
                } catch (Exception e) {
                    // Issue: Swallowing exceptions (silent failure)
                }
            });
        }
    }

    // Issue: No input validation
    public void updateUser(User user) {
        userCache.put(user.getId(), user);
        database.save(user);
    }
}
```

Works with Any Language

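The cool part: the context server is written in Python, but the code it coordinates can be in any language the models can read. C#, Kotlin, Java, whatever:

```python
# Example: Analyzing a multi-language codebase
import asyncio

from research_assistant import ResearchAssistant


async def analyze_mixed_codebase():
    # One assistant instance, so one context server shared across all three files.
    # Assumes the three sample files exist in the current directory.
    assistant = ResearchAssistant()

    # Analyze C# Unity code
    # (issues_found is a placeholder list in this simplified client)
    unity_results = await assistant.analyze_code("PlayerController.cs")
    print(f"Unity Issues Found: {len(unity_results['issues']['issues_found'])}")

    # Analyze Kotlin Android code - findings from the Unity run are still in the context
    android_results = await assistant.analyze_code("MainActivity.kt")
    print(f"Android Issues Found: {len(android_results['issues']['issues_found'])}")

    # Analyze Java service - sees recent entries from both earlier files
    # (up to get_context's max_entries)
    java_results = await assistant.analyze_code("UserService.java")
    print(f"Java Issues Found: {len(java_results['issues']['issues_found'])}")

    # Placeholder output: a final prompt over the shared context is where
    # cross-platform insights like these would come from
    print("\nCross-Platform Insights:")
    print("- Common patterns across all codebases")
    print("- Architecture recommendations")
    print("- Security concerns that span languages")


if __name__ == "__main__":
    asyncio.run(analyze_mixed_codebase())
```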
Why This Matters

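- One system reviews all your code: C#, Kotlin, Java, Python, whatever

- The models can spot the same bug patterns (missing null checks, unhandled errors) across different languages

- Issues found in your Unity code are already in the shared context when your Android code is analyzed

- Your whole team gets consistent reviews regardless of language

- The models can reason about how your different services talk to each other, as long as the relevant code and findings fit in the shared context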

FAQ

Does this work with any AI model?

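Yeah. The context server only stores plain Python dictionaries, so any model you can call from Python can read from and write to it; you just add a method for it in the client. MCP itself is model-agnostic too: what matters is whether the app hosting the model supports the protocol.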

What about costs? Am I paying for context multiple times?

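In a sense, yes: you pay for every token each model processes, so shared context is billed again in every prompt that includes it. Trimming and filtering the context, as covered in “Stuff That Will Trip You Up”, keeps that reasonable.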

Can I use local models?

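Yep, as long as you add a client call for them. Many local runners (Ollama, for example) expose an OpenAI-compatible endpoint, so the AsyncOpenAI client can reach Llama, Mistral, or whatever you’re running locally by pointing its base_url at the local server.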

How is this different from LangChain?

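LangChain is a framework for building chains, agents, and workflows. MCP is an open protocol for connecting models to tools and data sources; this tutorial uses that idea to give several models one shared context store. The two can be used together.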

What if one model gets something wrong?

That’s the upside: later models can catch errors from earlier ones. The flip side is that they can also repeat them, so treat shared findings as leads to verify, not facts.

Can it analyze languages besides Python?

Absolutely. The AI models can analyze any language - C#, Java, Kotlin, Go, Rust, whatever. The MCP server just coordinates them.

Get the Complete Code

All the code from this tutorial is available on GitHub with additional features:

View on GitHub: mcp-context-exchange-examples

The repository includes:

- Full implementation with error handling

- Interactive Jupyter notebook for experimentation

- Multi-language example files (C#, Kotlin, Java)

- Automated setup scripts for Windows/Mac/Linux

- Both uv and pip installation options

Quick Start

```bash
# Clone the repo
git clone https://github.com/angry-shark-studio/mcp-context-exchange-examples.git
cd mcp-context-exchange-examples

# Run the automated setup (Windows)
run_example.bat

# Run the automated setup (Mac/Linux)
./run_example.sh
```

The scripts handle everything - checking Python version, installing dependencies, verifying API keys, and running the example.

Next week: Building custom MCP tools that any AI model can use.

Need Multi-Model AI Integration?

Struggling to coordinate multiple AI models in your application? Our team specializes in:

- Custom MCP Implementations - Multi-model orchestration tailored to your needs

- AI Context Management - Sophisticated context sharing and persistence

- Production AI Systems - Scalable solutions for enterprise requirements

- Performance Optimization - Minimize token usage while maximizing results


Follow Angry Shark Studio for practical AI development guides. We make AI integration actually work.

About Angry Shark Studio

Angry Shark Studio is a professional Unity AR/VR development studio specializing in mobile multiplatform applications and AI solutions. Our team includes Unity Certified Expert Programmers with extensive experience in AR/VR development.
