Difficulty Level: Intermediate

Three weeks. That’s how long it took me to integrate the same database query feature across OpenAI, Google’s Gemini, and Anthropic’s Claude. Same functionality, three completely different implementations, endless debugging sessions.

The worst part? When OpenAI updated their function calling API two months later, I had to rewrite everything. Again.

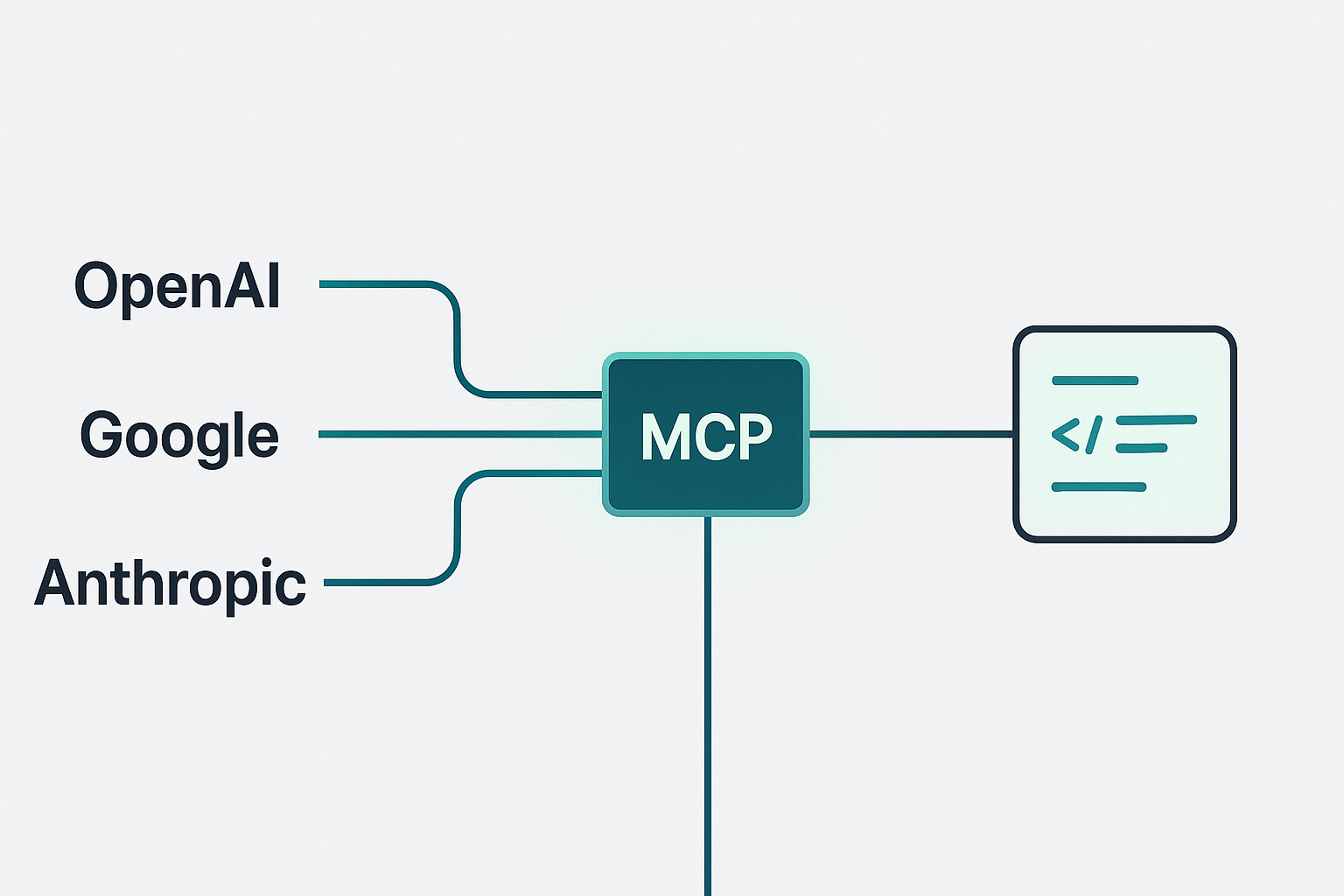

This is the hidden cost of proprietary AI tooling: the time sink that's killing developer productivity across the industry. Model Context Protocol (MCP) changes this equation by standardizing how AI applications connect models to external tools and data sources.

The Bottom Line: Developers waste 70-80% of their integration time dealing with proprietary differences rather than building features. MCP removes most of this overhead by providing one standard that any MCP-compatible client can use.

What You’ll Learn

- Why proprietary AI tools create integration nightmares

- Clear comparison: MCP simplicity vs proprietary complexity

- Real benefits: time saved, costs reduced, headaches eliminated

- When standardization makes sense for your projects

- What’s coming next in the MCP series

The Hidden Costs of Proprietary AI Tools

Let’s look at what happens when you need the same feature across different AI platforms. Here’s a simplified view of implementing a database query tool:

The Proprietary Maze

OpenAI’s Approach:

```python
# Complex nested schema definition
tools = [{
    "type": "function",
    "function": {
        "name": "query_database",
        "parameters": {
            "type": "object",
            "properties": {
                "query": {"type": "string"},
                "database": {"type": "string"}
            }
        }
    }
}]

# Plus 30+ more lines for handling responses...
```

Google’s Different World:

```python
# Completely different structure
query_db = genai.FunctionDeclaration(
    name="queryDatabase",  # Different naming!
    parameters={
        "properties": {
            "sqlQuery": {...},  # Different parameter names!
            "targetDatabase": {...}
        }
    }
)

# Plus another 30+ lines with different response handling...
```

Anthropic’s Unique Take:

```python
# Yet another format
tools = [{
    "name": "query_database",
    "input_schema": {
        "type": "object",  # required by the Messages API
        "properties": {
            "sql": {...},  # More different names!
            "db_name": {...}
        }
    }
}]

# Plus more platform-specific response code...
```

The Real Problems

Each platform requires:

- Different schema formats - Learn three ways to define the same tool

- Different parameter names - `query` vs `sqlQuery` vs `sql` once three separate integrations drift apart

- Different response handling - Unique patterns for each platform

- Separate error handling - Platform-specific error codes and formats

- Individual maintenance - Update your code three times for one feature

Result: You write the same logic three times, maintain three codebases, and pray nothing changes.
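The response-handling difference is easy to underestimate. As a rough sketch, here's what normalizing a tool call from each platform into a plain `(name, arguments)` pair can look like. The payloads are hand-written samples shaped after each vendor's REST response format as we understand it (not captured API output), and `extract_tool_calls` is a hypothetical helper; check the current API references before relying on these field paths.

```python
import json

# Hand-written sample payloads (illustrative, not real API output)
openai_resp = {"choices": [{"message": {"tool_calls": [{
    "id": "call_1", "type": "function",
    "function": {"name": "query_database",
                 "arguments": '{"query": "SELECT 1", "database": "main"}'},
}]}}]}
gemini_resp = {"candidates": [{"content": {"parts": [{
    "functionCall": {"name": "queryDatabase",
                     "args": {"sqlQuery": "SELECT 1", "targetDatabase": "main"}},
}]}}]}
anthropic_resp = {"content": [
    {"type": "text", "text": "Let me check."},
    {"type": "tool_use", "id": "toolu_1", "name": "query_database",
     "input": {"sql": "SELECT 1", "db_name": "main"}},
]}


def extract_tool_calls(provider: str, response: dict) -> list:
    """Hypothetical helper: return (tool_name, arguments) pairs from a response body."""
    calls = []
    if provider == "openai":
        # OpenAI Chat Completions: arguments arrive as a JSON-encoded string
        for call in response["choices"][0]["message"].get("tool_calls") or []:
            fn = call["function"]
            calls.append((fn["name"], json.loads(fn["arguments"])))
    elif provider == "gemini":
        # Gemini: function calls are parts of the first candidate's content
        for part in response["candidates"][0]["content"]["parts"]:
            if "functionCall" in part:
                fc = part["functionCall"]
                calls.append((fc["name"], fc.get("args", {})))
    elif provider == "anthropic":
        # Claude Messages API: tool calls are "tool_use" content blocks
        for block in response["content"]:
            if block["type"] == "tool_use":
                calls.append((block["name"], block["input"]))
    else:
        raise ValueError(f"unknown provider: {provider}")
    return calls


for provider, resp in [("openai", openai_resp), ("gemini", gemini_resp),
                       ("anthropic", anthropic_resp)]:
    print(provider, extract_tool_calls(provider, resp))
```

Even with the envelopes normalized, the argument names still differ, because each integration was defined separately. That drift is exactly what a shared standard is meant to remove.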

MCP: The Power of Standardization

Here’s what the same tool looks like with MCP:

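```python
# MCP - One simple definition for all platforms
from mcp.server.fastmcp import FastMCP

mcp = FastMCP("database")


@mcp.tool()
def query_database(query: str, database: str):
    """Query the database with SQL"""
    # Your actual logic here (execute_query stands in for your own code)
    return execute_query(query, database)

# That's it. Any MCP-compatible client can discover and call this tool,
# whichever model it runs:
# - OpenAI GPT models
# - Google Gemini
# - Anthropic Claude
# - Any future MCP-compatible AI
```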
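A single plain function can serve as the whole definition because an MCP SDK can derive the tool's input schema from the function's signature and docstring; the official Python SDK's FastMCP builds it with Pydantic. The sketch below is a much simpler stdlib approximation (the `tool_description` helper and its type mapping are hypothetical) that produces the `name` / `description` / `inputSchema` shape an MCP server advertises in its `tools/list` response.

```python
import inspect

# Hypothetical mapping from Python annotations to JSON Schema types
JSON_TYPES = {str: "string", int: "integer", float: "number", bool: "boolean"}


def tool_description(fn) -> dict:
    """Build an MCP-style tool entry from a function's signature and docstring."""
    properties, required = {}, []
    for name, param in inspect.signature(fn).parameters.items():
        json_type = JSON_TYPES.get(param.annotation)
        # Unknown or missing annotations become an unconstrained schema ({})
        properties[name] = {"type": json_type} if json_type else {}
        if param.default is inspect.Parameter.empty:
            required.append(name)  # no default value means the caller must supply it
    return {
        "name": fn.__name__,
        "description": inspect.getdoc(fn) or "",
        "inputSchema": {"type": "object", "properties": properties, "required": required},
    }


def query_database(query: str, database: str):
    """Query the database with SQL"""
    raise NotImplementedError  # stand-in; the real logic is not the point here


print(tool_description(query_database))
```

A real SDK handles far more (nested models, optional types, validation of incoming arguments), but the principle is the same: the function is the single source of truth, and the schema is generated from it.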

The Transformation

With MCP standardization:

- Write once - Single tool definition for all platforms

- Consistent naming - Same parameters everywhere

- Unified handling - One way to process responses

- Future-proof - New AI models work as soon as their client supports MCP

- 10x less code - Focus on features, not integration

The magic? The MCP client (the host application) handles the model-specific translation behind the scenes. You write your tool once, and every MCP-compatible client can use it.
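In practice, that translation happens in the MCP client: it fetches each tool's description from the server and rewraps it in whatever shape its model's API expects. Here is a minimal sketch of that step, with hypothetical `to_openai` / `to_anthropic` / `to_gemini` helpers; the target shapes reflect our reading of each vendor's tool format and are worth checking against current docs.

```python
# A tool entry as an MCP server might advertise it in its tools/list response
mcp_tool = {
    "name": "query_database",
    "description": "Query the database with SQL",
    "inputSchema": {
        "type": "object",
        "properties": {"query": {"type": "string"}, "database": {"type": "string"}},
        "required": ["query", "database"],
    },
}


def to_openai(tool: dict) -> dict:
    # OpenAI function tool: schema nested under "function" -> "parameters"
    return {"type": "function",
            "function": {"name": tool["name"],
                         "description": tool.get("description", ""),
                         "parameters": tool["inputSchema"]}}


def to_anthropic(tool: dict) -> dict:
    # Anthropic tool: flat object, schema under "input_schema"
    return {"name": tool["name"],
            "description": tool.get("description", ""),
            "input_schema": tool["inputSchema"]}


def to_gemini(tool: dict) -> dict:
    # Gemini function declaration: schema under "parameters"
    return {"name": tool["name"],
            "description": tool.get("description", ""),
            "parameters": tool["inputSchema"]}


for convert in (to_openai, to_anthropic, to_gemini):
    print(convert.__name__, convert(mcp_tool))
```

Since all three formats take a JSON Schema-style object for parameters, the host mostly moves fields around. One caveat: Gemini accepts a subset of the OpenAPI schema format, so a host may need to strip unsupported keywords from more complex schemas.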

Note: The actual implementation details of MCP will be covered in later posts in this series. For now, focus on understanding the benefits of standardization.

A Clear Comparison: MCP vs Proprietary Solutions

Let’s compare the key differences between MCP and proprietary approaches:

Feature Comparison

| Feature | MCP | OpenAI | Anthropic | Google |
|---|---|---|---|---|
| Standardized Schema | Yes (JSON-RPC 2.0 messages, JSON Schema inputs) | No (Custom) | No (Custom) | No (Custom) |
| Works Offline | Yes (local servers over stdio) | No | No | No |
| Multiple Transports | Yes (stdio, Streamable HTTP) | HTTPS only | HTTPS only | HTTPS only |
| Vendor Lock-in | None | High | High | High |
| Learning Curve | Learn once | Per platform | Per platform | Per platform |
| Tool Portability | Universal | Platform-specific | Platform-specific | Platform-specific |
| Update Impact | Minimal | Breaking changes | Breaking changes | Breaking changes |
| Documentation | Single standard | Fragmented | Fragmented | Fragmented |
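The "Works Offline" and "Multiple Transports" rows come down to how MCP moves messages. Over the stdio transport, the client launches the server as a local subprocess and the two exchange newline-delimited JSON-RPC 2.0 messages on stdin/stdout, with no network involved. The toy sketch below shows that framing. It handles only `tools/list` and `tools/call`, skips the `initialize` handshake, capability negotiation, and argument validation a real server (or SDK) performs, and its `TOOLS` registry is hypothetical.

```python
import io
import json

# Hypothetical tool registry for this toy; a real server would use an SDK
TOOLS = {
    "query_database": lambda args: f"ran {args['query']!r} on {args['database']}",
}


def handle(message: dict):
    """Answer one JSON-RPC 2.0 request; return None for notifications."""
    if "id" not in message:
        return None  # notifications (no id) never get a response
    method = message.get("method")
    if method == "tools/list":
        result = {"tools": [{"name": name, "inputSchema": {"type": "object"}}
                            for name in TOOLS]}
    elif method == "tools/call":
        params = message["params"]
        text = TOOLS[params["name"]](params.get("arguments", {}))
        result = {"content": [{"type": "text", "text": text}], "isError": False}
    else:
        return {"jsonrpc": "2.0", "id": message["id"],
                "error": {"code": -32601, "message": "Method not found"}}
    return {"jsonrpc": "2.0", "id": message["id"], "result": result}


def serve(stdin, stdout) -> None:
    """stdio framing: one JSON-RPC message per line in, one per line out."""
    for line in stdin:
        if not line.strip():
            continue
        response = handle(json.loads(line))
        if response is not None:
            # json.dumps escapes newlines inside strings, so each reply stays on one line
            stdout.write(json.dumps(response) + "\n")


# Simulate a client session in memory; a real server would call serve(sys.stdin, sys.stdout)
requests = io.StringIO(
    '{"jsonrpc": "2.0", "id": 1, "method": "tools/list"}\n'
    '{"jsonrpc": "2.0", "method": "notifications/initialized"}\n'
    '{"jsonrpc": "2.0", "id": 2, "method": "tools/call", "params": '
    '{"name": "query_database", "arguments": {"query": "SELECT 1", "database": "main"}}}\n'
)
replies = io.StringIO()
serve(requests, replies)
print(replies.getvalue(), end="")
```

The same JSON-RPC messages can travel over HTTP instead; only the framing changes, not the tool definitions.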

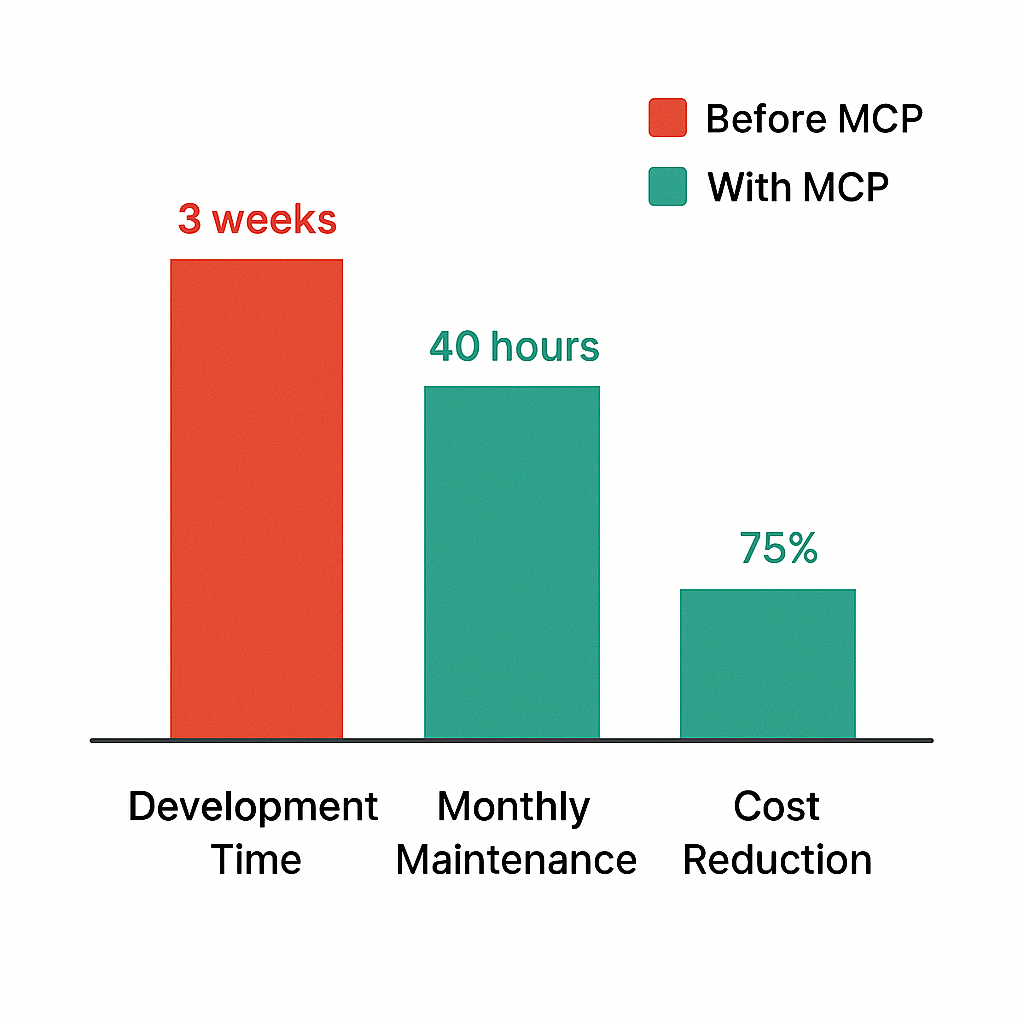

The Numbers Don’t Lie

Real teams report these improvements after switching to MCP:

Development Time:

- Building for 3 platforms: 3 weeks → 3 days

- Time saved: 75-80%

Maintenance Costs:

- Monthly updates: 40 hours → 10 hours

- Cost reduction: 75%

New Platform Support:

- Adding a new AI model: 2 weeks → close to zero, if the model's client already supports MCP

- It just works, as long as the client speaks MCP
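A quick check on those percentages, assuming 5-working-day weeks (an assumption; the figures above don't say whether they mean working or calendar days):

```latex
\text{Development: } \frac{15 - 3}{15} = 0.80 \;\Rightarrow\; 80\% \text{ saved}
\qquad
\text{Maintenance: } \frac{40 - 10}{40} = 0.75 \;\Rightarrow\; 75\% \text{ saved}
```

Counting calendar days instead, the development saving would be (21 - 3)/21, or about 86%.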

Real Impact: Before and After

Before MCP: The Integration Nightmare

Imagine you’re building an AI assistant that needs to work with multiple platforms. Your codebase looks like this:

```text
project/
├── openai_integration/
│   ├── tool_definitions.py    # 500+ lines
│   ├── response_handler.py    # 300+ lines
│   └── error_handling.py      # 200+ lines
├── google_integration/
│   ├── tool_schemas.py        # 450+ lines
│   ├── gemini_handler.py      # 350+ lines
│   └── exceptions.py          # 180+ lines
├── anthropic_integration/
│   ├── claude_tools.py        # 480+ lines
│   ├── message_handler.py     # 320+ lines
│   └── error_utils.py         # 190+ lines
└── platform_manager.py        # 1000+ lines of glue code
```

Total: 4,000+ lines of integration code before you write a single feature.

After MCP: Simple and Clean

```text
project/
├── mcp_tools.py    # 100 lines - all your tool definitions
├── server.py       # 50 lines - MCP server setup
└── main.py         # Your actual application logic
```

Total: 150 lines of integration code. Done.

The difference? You spend time building features, not fighting with integrations.

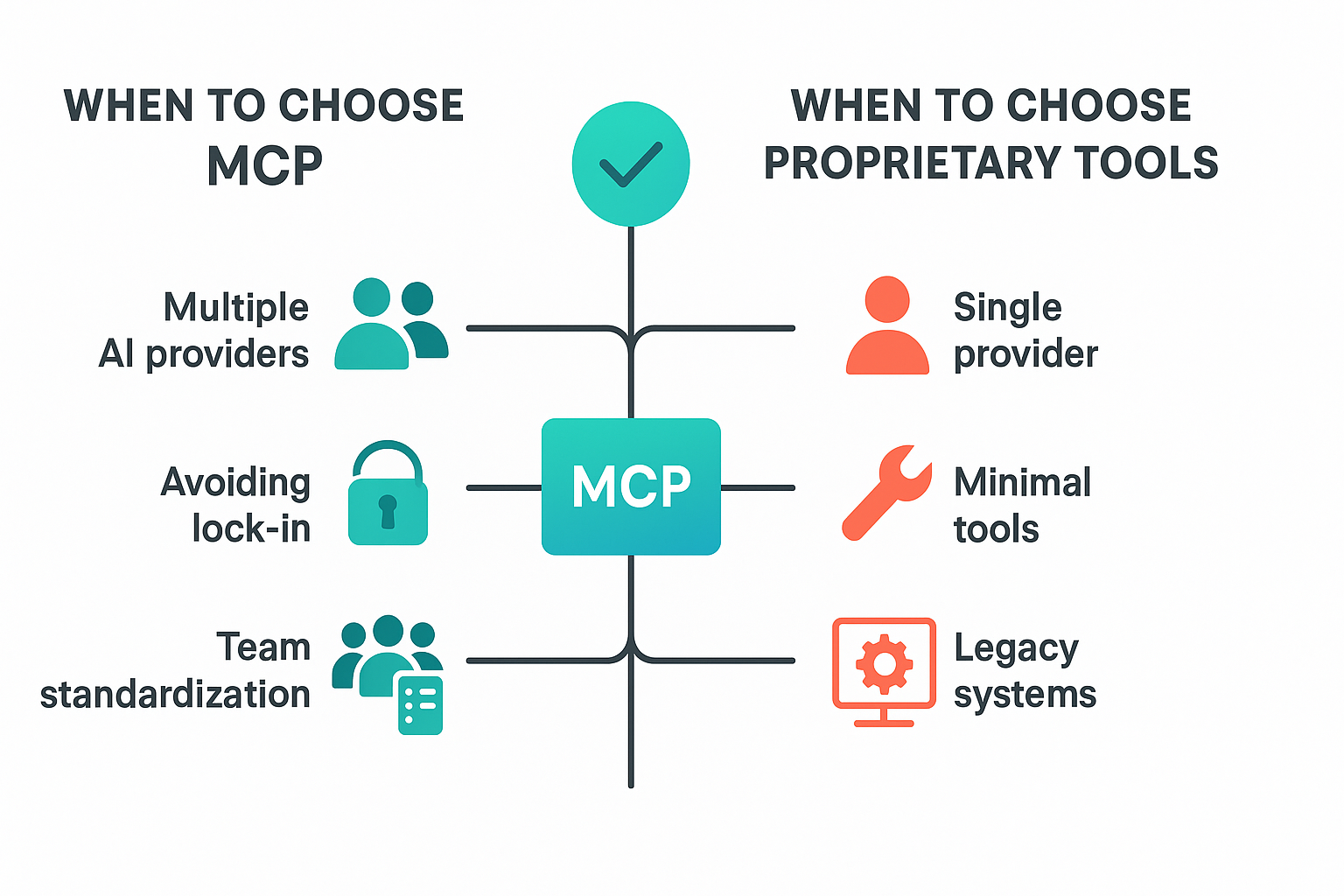

When Should You Consider MCP?

MCP isn’t always the answer. Here’s when it makes sense:

Perfect for MCP:

- Building multi-AI applications - Need to support multiple AI providers

- Avoiding vendor lock-in - Want flexibility to switch AI models

- Standardizing across teams - Multiple teams building AI integrations

- Local/offline requirements - Running AI tools without internet

- Long-term projects - Building for the future, not just today

Stick with Proprietary When:

- Single AI provider forever - Truly committed to one platform

- Minimal tool usage - Just 1-2 simple tools

- Legacy constraints - Can't modify existing infrastructure

- Proof of concept - Just testing ideas quickly

The Business Case for Standardization

Beyond the technical benefits, MCP delivers real business value:

- Reduced Costs: 75% less development and maintenance time

- Faster Innovation: Add features instead of managing integrations

- Team Scalability: New developers learn one standard, not three

- Future Flexibility: Switch AI providers based on performance, not lock-in

- Risk Mitigation: No single vendor dependency

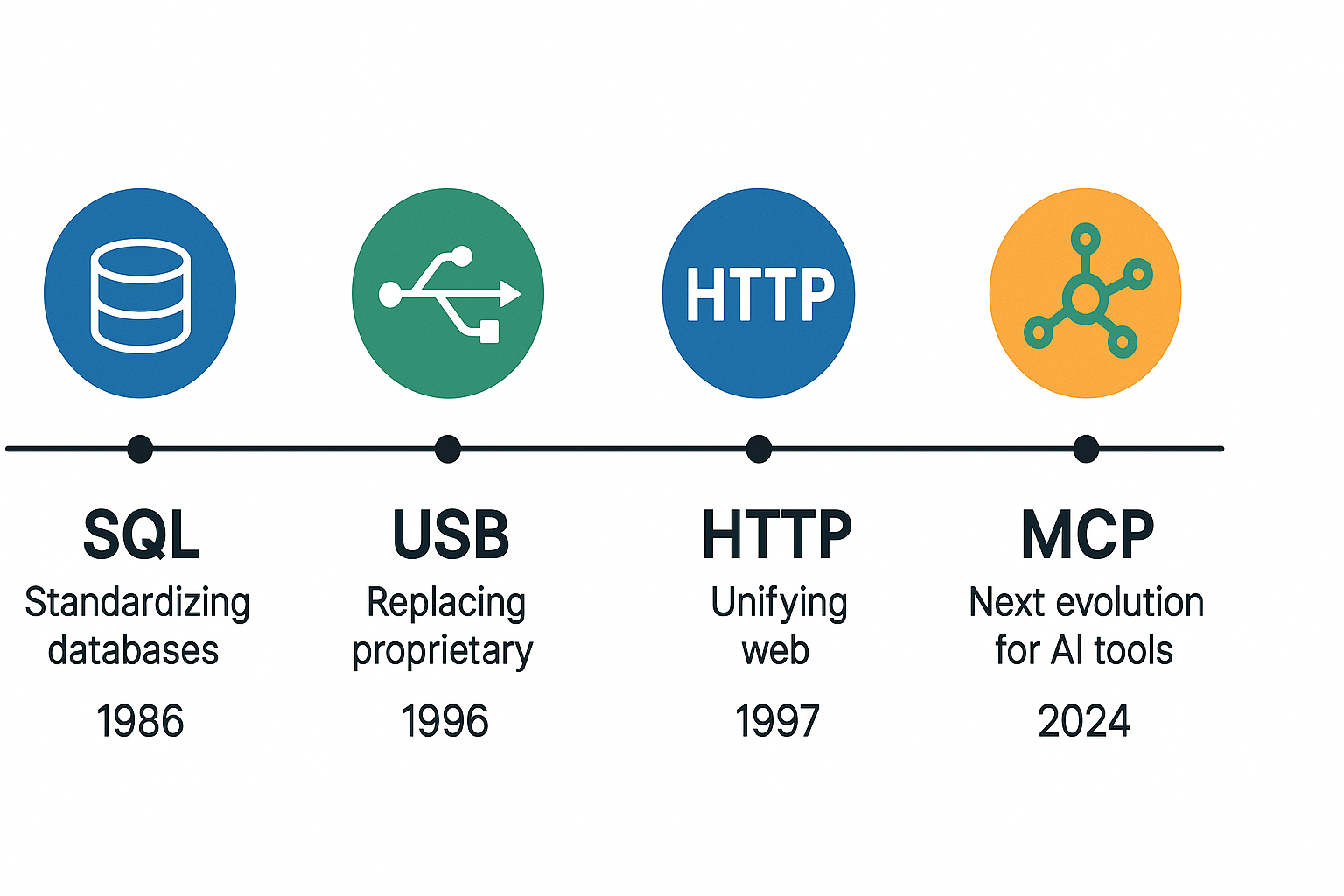

Learning from History: Why Standards Win

Standardization tends to win in technology. We've seen this pattern before:

- USB replaced dozens of proprietary connectors

- HTTP unified web communication

- SQL standardized database queries

- REST simplified API design

MCP is following the same path for AI tool integration. Early adopters of these standards gained competitive advantages while others played catch-up.

By choosing MCP now, you’re:

- Future-proofing against API changes

- Enabling flexibility to choose AI models based on merit

- Building portable applications that work everywhere

- Reducing technical debt before it accumulates

Frequently Asked Questions

What exactly is MCP compared to existing AI tool systems?

MCP (Model Context Protocol) is like a universal adapter for AI tools. While OpenAI, Google, and Anthropic each have their own proprietary ways to connect tools to their AI models, MCP provides one standard way to expose tools that any MCP-compatible client can use, whichever model it runs. Think of it as the USB-C of AI integrations: one connector that works everywhere.

Do I need to learn MCP if I’m only using one AI provider?

Not necessarily. If you’re committed to a single AI provider and have simple tool needs, their proprietary system might be sufficient. However, MCP is worth considering even for single-provider scenarios because it’s often simpler to implement, provides better local testing capabilities, and protects you from future API changes.

How much time does it really save compared to proprietary tools?

Based on real implementations, teams report 75-80% time savings. A typical multi-platform integration that takes 3 weeks with proprietary tools can be done in 3-4 days with MCP. More importantly, ongoing maintenance drops from 40 hours/month to about 10 hours/month because you’re maintaining one codebase instead of three.

What’s Next in This Series?

This is article 3 of 24 in our complete MCP series. We’ve explored what MCP is, why it matters strategically, and now how it compares to proprietary approaches.

Coming up next: “Breaking Down AI Silos: How MCP Creates Interoperability” - where we’ll explore how MCP enables different AI systems to work together smoothly, creating possibilities that weren’t feasible with proprietary approaches.

Your MCP Learning Path:

- ✓ “What is MCP?” - The basics

- ✓ “Why Anthropic Chose MCP” - Strategic context

- ✓ “Why Standardization Matters” - You are here

- → “Breaking Down AI Silos” - Coming next

Want to dive deeper? The technical implementation details come later in the series. Posts 8-12 will cover hands-on MCP development, including a complete guide to building MCP servers.

Have questions? Check out our complete blog or reach out to us if you’re considering MCP for your projects.

The Bottom Line: Every day you spend wrestling with proprietary AI integrations is a day not spent building features. MCP changes that equation. One standard, endless possibilities.

For official MCP documentation and resources, visit the MCP GitHub repository.

About Angry Shark Studio

Angry Shark Studio is a professional Unity AR/VR development studio specializing in mobile multiplatform applications and AI solutions. Our team includes Unity Certified Expert Programmers with extensive experience in AR/VR development.
