Your team needs to connect a proprietary database to Claude. You check the MCP specification. No built-in database connector.

Do you wait for the spec to add it? Build something non-standard? Give up on MCP entirely?

MCP’s answer: extend the protocol without breaking it.

Prerequisites

New to MCP? Start with these foundational posts:

- What is the Model Context Protocol? - Understanding MCP basics

- Breaking Down AI Silos - Why standards matter

- Sessions, States, and Continuity - Implementation details

This post builds on MCP fundamentals and assumes basic familiarity with protocol design and Python.

The Problem with Pure Standards

Standards that try to do everything end up doing nothing well.

SOAP (Simple Object Access Protocol) promised to solve enterprise integration. Instead, it created a nightmare of complexity. A simple API call required understanding WSDL, XML namespaces, and dozens of WS-* specifications.

Here’s what a basic SOAP request looks like:

<?xml version="1.0"?>

<soap:Envelope

xmlns:soap="http://www.w3.org/2003/05/soap-envelope"

xmlns:wsa="http://www.w3.org/2005/08/addressing"

xmlns:wsse="http://docs.oasis-open.org/wss/2004/01/oasis-200401-wss-wssecurity-secext-1.0.xsd">

<soap:Header>

<wsa:Action>http://example.com/GetUserDetails</wsa:Action>

<wsa:To>http://example.com/users</wsa:To>

<wsse:Security>

<wsse:UsernameToken>

<wsse:Username>user</wsse:Username>

<wsse:Password Type="...">password</wsse:Password>

</wsse:UsernameToken>

</wsse:Security>

</soap:Header>

<soap:Body>

<GetUserDetails xmlns="http://example.com/schema">

<UserId>12345</UserId>

</GetUserDetails>

</soap:Body>

</soap:Envelope>

Just to get user details. The standard specified everything: how to handle errors, security, transactions, routing, reliable messaging. The result? Nobody could implement it completely, and everyone implemented it differently.

CORBA had the same problem. It tried to standardize distributed computing across languages and platforms. The specification was thousands of pages long. Companies spent years building CORBA implementations that were technically compliant but practically incompatible.

When standards over-specify, developers face a choice: implement everything (impossible), implement some of it (incompatible), or ignore the standard entirely (why have it?).

The rigid approach also stifles innovation. Need a feature the standard doesn’t have? Too bad. Either wait for the standards committee to add it (takes years), or build something non-compliant and lose interoperability.

The Problem with No Standards

The opposite approach is equally broken.

Before REST became the de facto standard for web APIs, every company invented their own RPC mechanism. Same functionality, different implementations:

// Company A's API - positional parameters, abbreviated field names

sendData("user", {nm: "John", ag: 25})

// Company B's API - verbose structure, explicit type field

api_push({

"type": "user",

"name": "John",

"age": 25

})

// Company C's API - custom serialization format

USER::CREATE<<John|25>>

// Company D's API - array-based encoding

post("create", ["user", "John", "25"])

Each company thought their approach was obviously correct. None of them were wrong, just different. The problem wasn’t bad design; it was a lack of coordination.

Integration becomes a nightmare. Want to build a tool that works with multiple services? You’re writing custom adapters for each one. A change to one API means updating your adapter. Scale this across hundreds of services and you have an unmaintainable mess.

This happened in the AI space before MCP. OpenAI had function calling. Anthropic had tool use. Google had extensions. Each platform’s tools were proprietary and incompatible.

Building an AI agent that could use tools meant picking one platform and staying there. Want to compare GPT-4 vs Claude on the same task? Rebuild all your tools. Want to test an open-source model? Start from scratch.

Without standards, every integration is bespoke. Every update is painful. Every new platform is a rewrite.

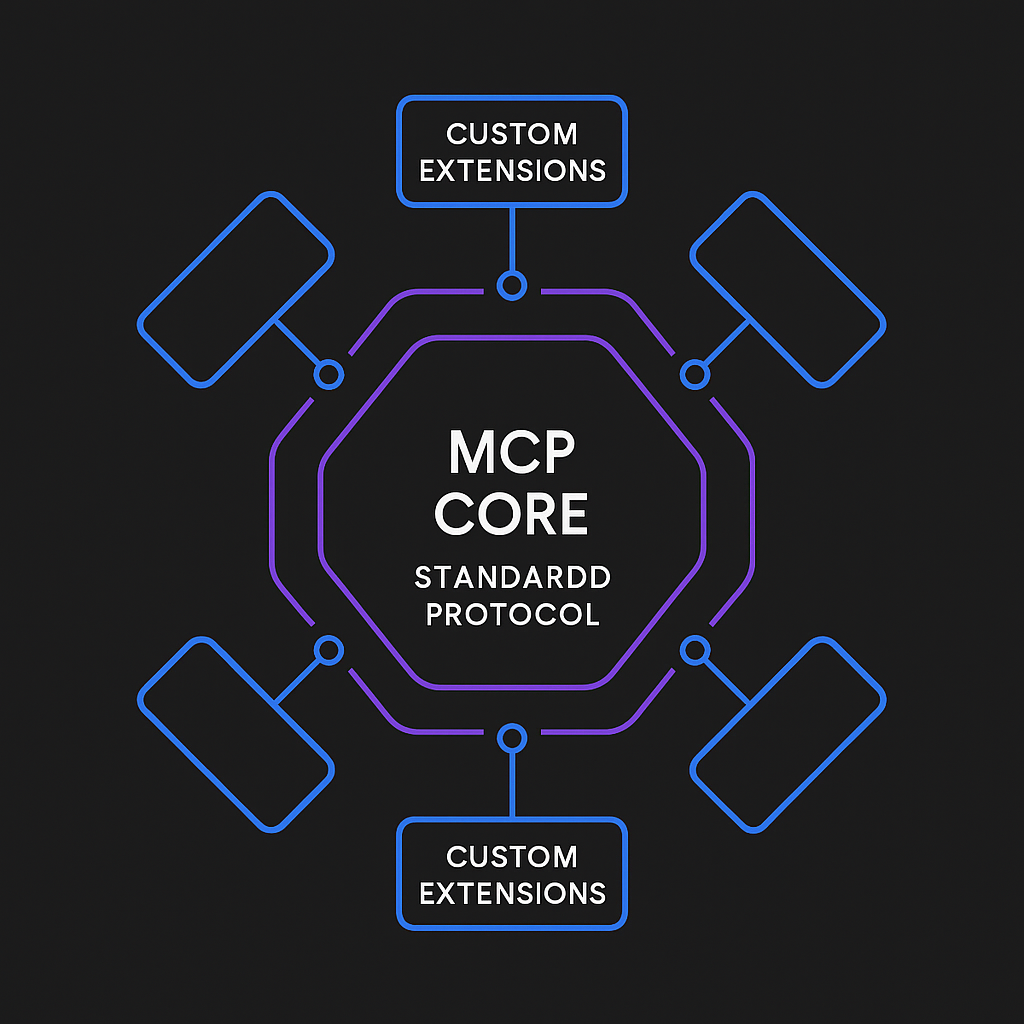

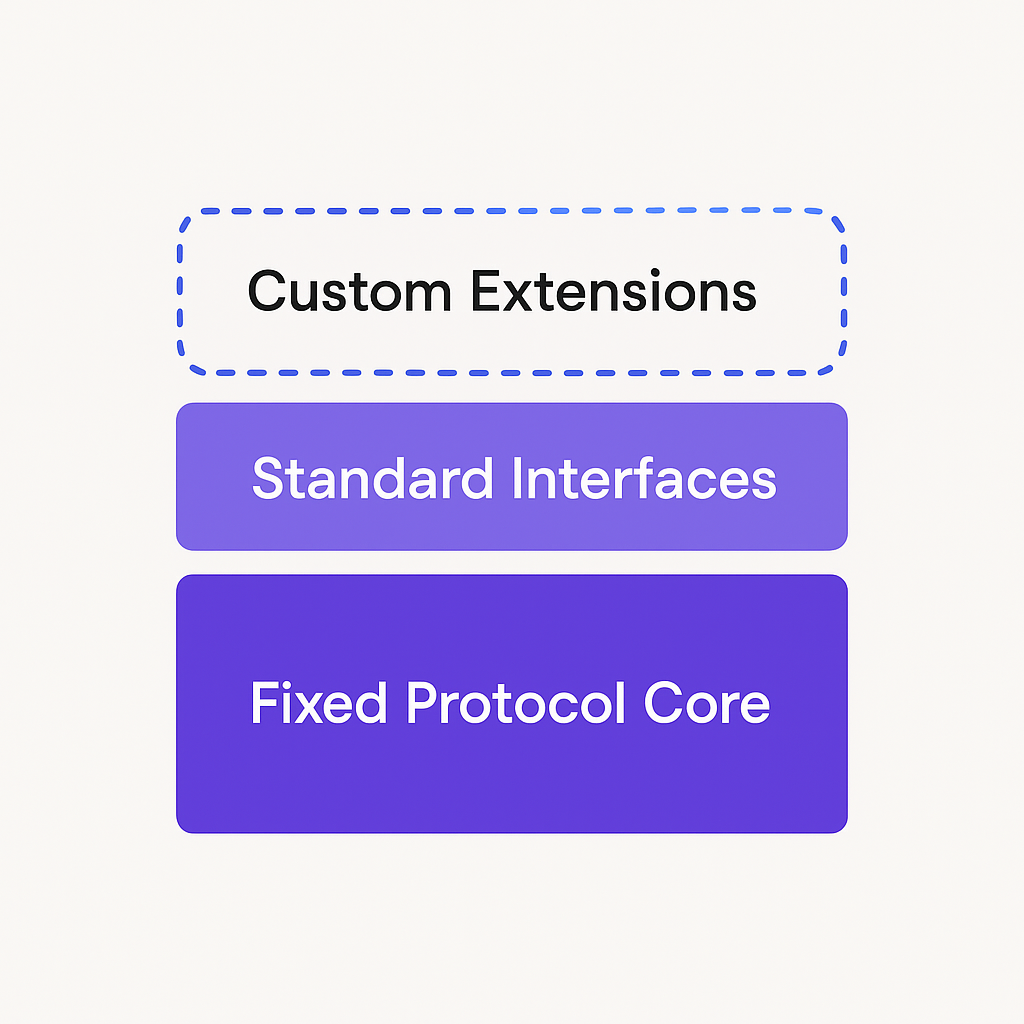

What MCP Actually Standardizes

MCP doesn’t try to solve every problem. It standardizes the minimum necessary for interoperability.

Fixed Message Format

MCP uses JSON-RPC 2.0. This is not optional. Every MCP message must follow this structure:

# Request format - always the same structure

{

"jsonrpc": "2.0",

"method": "tools/list",

"id": 1

}

# Response format - standardized

{

"jsonrpc": "2.0",

"result": {

"tools": [...]

},

"id": 1

}

# Error format - consistent across all implementations

{

"jsonrpc": "2.0",

"error": {

"code": -32601,

"message": "Method not found"

},

"id": 1

}

This consistency means any MCP client can parse any MCP server’s messages. The client doesn’t need to know implementation details - it knows the structure.
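To make the "any client can parse any server" point concrete, here is a minimal sketch of how a receiver might sort incoming messages by shape alone. The function name `classify_message` is hypothetical and not part of any SDK; it relies only on the JSON-RPC 2.0 fields shown above.

```python
import json


def classify_message(raw: str) -> str:
    """Classify a JSON-RPC 2.0 message by its structure alone."""
    msg = json.loads(raw)
    if not isinstance(msg, dict) or msg.get("jsonrpc") != "2.0":
        raise ValueError("not a JSON-RPC 2.0 message")
    if "method" in msg:
        # Requests carry an id; notifications do not expect a reply.
        return "request" if "id" in msg else "notification"
    if "error" in msg:
        return "error"
    if "result" in msg:
        return "response"
    raise ValueError("message has neither method, result, nor error")


print(classify_message('{"jsonrpc": "2.0", "method": "tools/list", "id": 1}'))
```

Nothing here depends on which server produced the message, which is the whole point of fixing the envelope.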

Core Method Names

MCP defines a small set of standard methods. A server implements the ones that match the capabilities it declares during initialization:

# Core methods - standardized across all MCP servers

"tools/list" # List available tools

"tools/call" # Execute a specific tool

"resources/list" # List available resources

"resources/read" # Read a resource

"prompts/list" # List available prompts

"prompts/get" # Get a specific prompt

These method names are fixed. A server can’t rename tools/list to list_tools and claim MCP compliance. This rigidity enables client compatibility.

Session Lifecycle

MCP specifies how connections start and end:

# Initialize connection - required first step

{

"jsonrpc": "2.0",

"method": "initialize",

"params": {

"protocolVersion": "2024-11-05",

# Client capabilities describe what the client offers (roots, sampling)

"capabilities": {

"roots": {"listChanged": true},

"sampling": {}

},

"clientInfo": {

"name": "example-client",

"version": "1.0.0"

}

},

"id": 1

}

# Server responds with its capabilities

{

"jsonrpc": "2.0",

"result": {

"protocolVersion": "2024-11-05",

"capabilities": {

"tools": {},

"resources": {"subscribe": true}

},

"serverInfo": {

"name": "example-server",

"version": "1.0.0"

}

},

"id": 1

}

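This handshake is mandatory. It allows capability negotiation before actual work begins: clients learn which features the server offers, and servers learn what the client supports. The client then sends a notifications/initialized notification to signal that normal operation can start.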
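A client can use the negotiated capabilities to decide which features to touch. The helper below is a minimal sketch under that assumption; `supports` is a hypothetical name, and the dictionary mirrors the server response shown above.

```python
from typing import Optional


def supports(capabilities: dict, feature: str, option: Optional[str] = None) -> bool:
    """Return True if the peer declared `feature` (and, optionally, a sub-option)."""
    if feature not in capabilities:
        return False
    if option is None:
        return True
    return bool(capabilities[feature].get(option, False))


# Capabilities as returned in the server's initialize response
server_capabilities = {"tools": {}, "resources": {"subscribe": True}}

if supports(server_capabilities, "resources", "subscribe"):
    print("resource subscriptions available")
if not supports(server_capabilities, "prompts"):
    print("skipping prompts/list: server did not declare prompts")
```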

Standard Error Codes

MCP uses JSON-RPC error codes with specific meanings:

# Standard error codes - clients can handle these uniformly

-32700 # Parse error - invalid JSON

-32600 # Invalid request - missing required fields

-32601 # Method not found - unsupported method name

-32602 # Invalid params - wrong parameter structure

-32603 # Internal error - server-side failure

This standardization means clients can handle errors consistently across all servers. An error code -32601 always means “method not found” regardless of which server sent it.
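As a rough illustration of where each code comes from, here is a toy dispatcher that maps failures onto the five codes above. It is a sketch, not an SDK implementation: `dispatch` and `make_error` are hypothetical names, notifications are not handled, and any `TypeError` from the handler call is treated as a parameter mismatch for simplicity.

```python
import json

PARSE_ERROR, INVALID_REQUEST = -32700, -32600
METHOD_NOT_FOUND, INVALID_PARAMS, INTERNAL_ERROR = -32601, -32602, -32603


def make_error(code: int, message: str, msg_id=None) -> dict:
    return {"jsonrpc": "2.0", "error": {"code": code, "message": message}, "id": msg_id}


def dispatch(raw: str, handlers: dict) -> dict:
    """Route one raw request to a handler, translating failures to standard codes."""
    try:
        msg = json.loads(raw)
    except json.JSONDecodeError:
        return make_error(PARSE_ERROR, "Parse error")
    if not isinstance(msg, dict) or msg.get("jsonrpc") != "2.0" or "method" not in msg:
        return make_error(INVALID_REQUEST, "Invalid Request")
    msg_id = msg.get("id")
    handler = handlers.get(msg["method"])
    if handler is None:
        return make_error(METHOD_NOT_FOUND, "Method not found", msg_id)
    try:
        result = handler(**msg.get("params", {}))
    except TypeError:
        # Simplification: a signature mismatch is reported as bad params
        return make_error(INVALID_PARAMS, "Invalid params", msg_id)
    except Exception:
        return make_error(INTERNAL_ERROR, "Internal error", msg_id)
    return {"jsonrpc": "2.0", "result": result, "id": msg_id}


handlers = {"tools/list": lambda: {"tools": []}}
print(dispatch('{"jsonrpc": "2.0", "method": "list_tools", "id": 2}', handlers))
```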

That’s what MCP standardizes. Not much, right? That’s intentional.

Where MCP Lets You Customize

Everything not explicitly standardized is open for extension.

Custom Tool Definitions

MCP standardizes how to call tools, not what tools exist. Your server can define any tools your use case needs:

from typing import Any

from mcp.server.fastmcp import FastMCP

mcp = FastMCP("database-server")

# Custom tool - MCP doesn't care what this does

@mcp.tool()

async def query_legacy_oracle(

sql: str,

timeout: int = 30

) -> dict[str, Any]:

"""

Query our legacy Oracle database that's been running since 2003.

Args:

sql: The query to execute

timeout: Query timeout in seconds

"""

# Your implementation here

async with get_oracle_connection() as conn:

result = await conn.fetch(sql)

return {

"rows": result,

"count": len(result),

"execution_time": result.execution_time

}

MCP specifies that tools must be declared via tools/list and called via tools/call, and that each tool advertises its parameters as a JSON Schema (inputSchema). It doesn’t specify what the tool does, which parameters it accepts, or how it’s implemented.

This flexibility lets you integrate anything:

- Proprietary databases

- Internal APIs

- Custom business logic

- Legacy systems

- Specialized hardware

As long as your tool follows the calling convention, it’s valid MCP.

Custom Resource Types

Resources work the same way. MCP standardizes the access pattern, not the data:

@mcp.resource("company://metrics/quarterly-revenue")

async def get_quarterly_revenue() -> str:

"""

Internal financial metrics - only available on our network.

Returns JSON with revenue data from our ERP system.

"""

async with get_erp_connection() as erp:

data = await erp.query_revenue(quarter="current")

return json.dumps({

"revenue": data.total,

"breakdown": data.by_product,

"updated": data.timestamp

})

The resource URI scheme (company://) is custom. The data format is custom. The source system is custom. Only the access method (resources/read) is standardized.
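To show how little the protocol cares about the URI itself, here is a small stand-alone sketch of a resource registry keyed by URI. The `resource` decorator and `read_resource` function are hypothetical stand-ins written for this post, not the SDK's implementation, and the payload is a placeholder.

```python
from urllib.parse import urlparse

RESOURCE_HANDLERS = {}


def resource(uri: str):
    """Register a handler for one resource URI (toy version of a resource decorator)."""
    def register(func):
        RESOURCE_HANDLERS[uri] = func
        return func
    return register


@resource("company://metrics/quarterly-revenue")
def quarterly_revenue() -> str:
    return '{"revenue": 0}'  # placeholder payload


def read_resource(uri: str) -> str:
    """Look up and invoke the handler for a URI; any scheme is acceptable."""
    if not urlparse(uri).scheme:
        raise ValueError(f"resource URI needs a scheme: {uri!r}")
    handler = RESOURCE_HANDLERS.get(uri)
    if handler is None:
        raise KeyError(f"unknown resource: {uri}")
    return handler()


print(urlparse("company://metrics/quarterly-revenue").scheme)
print(read_resource("company://metrics/quarterly-revenue"))
```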

Transport Layer Extensions

MCP supports multiple transport mechanisms. The specification defines stdio and an HTTP-based transport (HTTP with SSE in the 2024-11-05 revision, Streamable HTTP in later ones), but you can implement your own:

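import websockets

# Assumption: Message is a JSON-RPC message wrapper exposing to_json()/from_json();

# check your SDK version for the actual message type

from mcp.shared.message import Message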

class SecureWebSocketTransport:

"""

Custom transport using WebSocket with your company's auth.

MCP doesn't specify this, but the protocol still works.

"""

def __init__(self, url: str, auth_token: str, company_id: str):

self.url = url

self.auth_token = auth_token

self.company_id = company_id  # sent as X-Company-ID in connect()

self.ws = None

async def connect(self):

"""Establish connection with custom authentication"""

self.ws = await websockets.connect(

self.url,

extra_headers={

"Authorization": f"Bearer {self.auth_token}",

"X-Company-ID": self.company_id

}

)

async def send(self, message: Message):

"""Send MCP message over WebSocket"""

await self.ws.send(message.to_json())

async def receive(self) -> Message:

"""Receive MCP message from WebSocket"""

data = await self.ws.recv()

return Message.from_json(data)

The MCP messages are standard. The transport mechanism is custom. Clients that understand your transport can connect. Standard clients can’t, but that’s fine - they use standard transports.

Configuration and Metadata

You can extend MCP messages with custom metadata:

# Standard MCP tool call with custom extensions

{

"jsonrpc": "2.0",

"method": "tools/call",

"params": {

"name": "query_database",

"arguments": {

"query": "SELECT * FROM users WHERE active = true"

},

# Custom extensions - namespaced to avoid conflicts

"_custom_acme_corp": {

"priority": "high",

"audit_log": true,

"timeout_override": 60,

"request_tracking_id": "req-2024-001"

}

},

"id": 42

}

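The core MCP structure is unchanged. The custom fields sit under a single namespaced key (_custom_acme_corp) to avoid conflicts. A peer that doesn’t recognize the key is expected to ignore it; a peer that does can act on it. The specification also reserves a _meta field inside params and results for attaching metadata, which is a natural home for a namespace like this.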

This pattern lets you add:

- Custom authentication metadata

- Performance tuning parameters

- Audit logging information

- Business-specific tracking

- Enterprise compliance data

As long as you don’t break the standard structure, you can extend freely.
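On the receiving side, handling such extensions can be as simple as separating namespaced keys from standard ones and reading the custom values with defaults. This is a minimal sketch; `split_extensions` and the `_custom_` prefix convention are assumptions taken from the example above, not something the specification defines.

```python
from typing import Any


def split_extensions(
    params: dict[str, Any], prefix: str = "_custom_"
) -> tuple[dict[str, Any], dict[str, Any]]:
    """Separate standard params from namespaced extension blocks."""
    standard: dict[str, Any] = {}
    extensions: dict[str, Any] = {}
    for key, value in params.items():
        (extensions if key.startswith(prefix) else standard)[key] = value
    return standard, extensions


params = {
    "name": "query_database",
    "arguments": {"query": "SELECT * FROM users WHERE active = true"},
    "_custom_acme_corp": {"priority": "high", "timeout_override": 60},
}

standard, extensions = split_extensions(params)
acme = extensions.get("_custom_acme_corp", {})
timeout = acme.get("timeout_override", 30)  # fall back when the extension is absent
print(sorted(standard), timeout)
```

A receiver that knows nothing about `_custom_acme_corp` can process `standard` and drop the rest.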

Three Real Implementation Examples

Let’s see how this works in practice with complete implementations.

About These Examples:

- Example 1 (SQLite): Fully runnable - you can try this on your machine right now! All code is in docs/mcp_standardization_example/

- Example 2 (SSO): Illustrative pattern - shows enterprise auth integration (requires corporate infrastructure)

- Example 3 (Model Parameters): Illustrative pattern - shows custom model integration (requires fine-tuned models)

Example 1 uses tools you already have (Python + SQLite). Examples 2 and 3 demonstrate enterprise patterns but require infrastructure most readers won’t have. They’re included to show real-world MCP extension scenarios.

Example 1: Custom SQLite Database Tool (You Can Run This!)

Scenario: You want to let AI query a local database. This example uses SQLite (built into Python) so you can actually run it.

Setup (2 minutes):

# 1. Create project directory

mkdir mcp-database-example

cd mcp-database-example

# 2. Install MCP (only dependency needed!)

pip install mcp

# 3. Copy the complete server code below into database_server.py

# 4. Run it! (creates database automatically on first run)

python database_server.py

Complete Working Implementation:

from mcp.server.fastmcp import FastMCP

import sqlite3

import json

from typing import Any

mcp = FastMCP("simple-database-server")

# Initialize sample database with data

def setup_database():

"""Create sample database - you can customize this!"""

conn = sqlite3.connect('sample.db')

cursor = conn.cursor()

# Create users table

cursor.execute('''

CREATE TABLE IF NOT EXISTS users (

id INTEGER PRIMARY KEY,

name TEXT NOT NULL,

email TEXT NOT NULL UNIQUE, -- lets INSERT OR IGNORE skip existing rows on reruns

city TEXT,

role TEXT

)

''')

# Insert sample data

sample_users = [

('Alice Johnson', 'alice@example.com', 'New York', 'engineer'),

('Bob Smith', 'bob@example.com', 'Los Angeles', 'designer'),

('Carol White', 'carol@example.com', 'Chicago', 'manager'),

('David Brown', 'david@example.com', 'New York', 'engineer'),

('Eve Davis', 'eve@example.com', 'Boston', 'analyst')

]

cursor.executemany('''

INSERT OR IGNORE INTO users (name, email, city, role)

VALUES (?, ?, ?, ?)

''', sample_users)

conn.commit()

conn.close()

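# Log to stderr: under the stdio transport, stdout carries the JSON-RPC stream

import sys

print("Database created with sample data!", file=sys.stderr)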

# Setup database on first run

setup_database()

@mcp.tool()

async def query_database(

query: str,

max_rows: int = 100

) -> dict[str, Any]:

"""

Query the local SQLite database.

This is a simple example you can run on your machine!

All you need is Python and the MCP library.

Args:

query: SQL query to execute (SELECT only)

max_rows: Maximum rows to return

Returns:

Dictionary with query results and metadata

"""

# Safety: only allow SELECT queries

if not query.strip().upper().startswith('SELECT'):

return {

"error": "Only SELECT queries allowed",

"hint": "Try: SELECT * FROM users WHERE city = 'New York'"

}

try:

conn = sqlite3.connect('sample.db')

cursor = conn.cursor()

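# Execute the query as written; the row cap is applied when fetching,

# so queries that already contain LIMIT or end with a semicolon still work

cursor.execute(query)

# Get column names and results

columns = [desc[0] for desc in cursor.description]

rows = cursor.fetchmany(max_rows)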

# Format as dictionaries for easy reading

results = [dict(zip(columns, row)) for row in rows]

conn.close()

return {

"success": True,

"data": results,

"count": len(results),

"truncated": len(results) >= max_rows,

"columns": columns

}

except sqlite3.Error as e:

return {

"success": False,

"error": str(e),

"hint": "Check your SQL syntax"

}

@mcp.tool()

async def list_tables() -> dict[str, Any]:

"""List all tables in the database"""

try:

conn = sqlite3.connect('sample.db')

cursor = conn.cursor()

cursor.execute("""

SELECT name FROM sqlite_master

WHERE type='table'

""")

tables = [row[0] for row in cursor.fetchall()]

conn.close()

return {

"success": True,

"tables": tables

}

except sqlite3.Error as e:

return {

"success": False,

"error": str(e)

}

# Run the server

if __name__ == "__main__":

import sys

# Log to stderr: under the stdio transport, stdout carries the JSON-RPC stream

print("Starting MCP database server...", file=sys.stderr)

mcp.run()

Try it yourself:

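# Save this as test_client.py and run it with: python test_client.py
# The client launches database_server.py itself over the stdio transport,
# so the server does not need to be running in another terminal.

import asyncio

from mcp import ClientSession, StdioServerParameters
from mcp.client.stdio import stdio_client

async def test_database():
    server = StdioServerParameters(command="python", args=["database_server.py"])
    async with stdio_client(server) as (read, write):
        async with ClientSession(read, write) as session:
            # Mandatory handshake before any other request
            await session.initialize()

            # List available tables
            tables = await session.call_tool("list_tables", {})
            print("Tables:", tables.content[0].text)

            # Query the database
            result = await session.call_tool(
                "query_database",
                {"query": "SELECT * FROM users WHERE city = 'New York'"},
            )
            # Tool results arrive as content blocks; the dict returned by the
            # tool is typically serialized to JSON text
            print("Results:", result.content[0].text)

# Expected shape of the result:
# {
#   "success": true,
#   "data": [
#     {"id": 1, "name": "Alice Johnson", "email": "alice@example.com", ...},
#     {"id": 4, "name": "David Brown", "email": "david@example.com", ...}
#   ],
#   "count": 2,
#   "truncated": false
# }

# Run it
asyncio.run(test_database())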

What’s Standard: How the tool is advertised (tools/list), the calling convention (tools/call), and the JSON-RPC response envelope. The @mcp.tool() decorator is the Python SDK’s way of registering the tool.

What’s Custom: Database connection, query handling, safety checks, result formatting, and the additional list_tables tool.

Why It Works: AI clients don’t need to know about SQLite. They just call query_database with SQL. Your server handles all the database complexity.

Real-World Usage: This pattern works for any database (PostgreSQL, MySQL, MongoDB). Replace SQLite connection with your database client, keep the MCP tool structure the same. That’s the power of MCP extensibility!

Note: The complete database_server.py code is shown above. It creates the database automatically on first run, so you can start immediately with just this one file!

Example 2: Enterprise SSO Authentication

Scenario: Your company requires all systems to use corporate SSO (SAML-based). Standard MCP transports don’t support this. You need MCP to work within corporate security policies.

Solution: Extend the transport layer with SSO integration.

from mcp.shared.session import BaseSession

from mcp.shared.message import Message

import httpx

import jwt

from datetime import datetime, timedelta

from typing import Optional

class EnterpriseAuthTransport:

"""

Custom MCP transport with corporate SSO integration.

Handles SAML token exchange, token refresh, and secure session management.

"""

def __init__(

self,

server_url: str,

sso_endpoint: str,

client_cert: str,

client_key: str

):

self.server_url = server_url

self.sso_endpoint = sso_endpoint

self.client_cert = client_cert

self.client_key = client_key

self.access_token: Optional[str] = None

self.token_expiry: Optional[datetime] = None

# HTTP client with corporate certificate

self.http = httpx.AsyncClient(

cert=(client_cert, client_key),

verify="/etc/ssl/corporate-ca.pem" # Corporate CA bundle

)

async def authenticate(self) -> str:

"""

Exchange credentials for access token via corporate SSO.

This is completely custom - not part of MCP standard.

"""

# Step 1: Get SAML assertion from SSO

saml_response = await self.http.post(

f"{self.sso_endpoint}/saml/login",

data={

"client_id": "mcp-client",

"client_secret": self._get_client_secret(),

"grant_type": "client_credentials"

}

)

saml_assertion = saml_response.json()["assertion"]

# Step 2: Exchange SAML for access token

token_response = await self.http.post(

f"{self.sso_endpoint}/token",

data={

"assertion": saml_assertion,

"scope": "mcp:read mcp:write"

}

)

token_data = token_response.json()

self.access_token = token_data["access_token"]

# Parse expiry from JWT

decoded = jwt.decode(

self.access_token,

options={"verify_signature": False}

)

self.token_expiry = datetime.fromtimestamp(decoded["exp"])

return self.access_token

async def ensure_valid_token(self):

"""Refresh token if expired or about to expire"""

if not self.access_token or not self.token_expiry:

await self.authenticate()

return

# Refresh if less than 5 minutes remaining

if datetime.now() + timedelta(minutes=5) > self.token_expiry:

await self.authenticate()

async def send_mcp_message(self, message: Message) -> Message:

"""

Send MCP message with corporate authentication.

The message structure is standard MCP.

The transport and auth are custom.

"""

await self.ensure_valid_token()

# Send standard MCP message with custom auth header

response = await self.http.post(

f"{self.server_url}/mcp",

json=message.to_dict(),

headers={

"Authorization": f"Bearer {self.access_token}",

"X-Corporate-Request-ID": self._generate_request_id(),

"X-Client-Certificate-SN": self._get_cert_serial_number()

}

)

return Message.from_dict(response.json())

def _get_client_secret(self) -> str:

"""Load client secret from corporate secrets manager"""

# Implementation specific to corporate infrastructure;

# raising here avoids silently sending None as a credential

raise NotImplementedError

def _generate_request_id(self) -> str:

"""Generate tracking ID for audit logs"""

raise NotImplementedError

def _get_cert_serial_number(self) -> str:

"""Extract serial number from client certificate"""

raise NotImplementedError

# Usage

transport = EnterpriseAuthTransport(

server_url="https://mcp-server.company.com",

sso_endpoint="https://sso.company.com",

client_cert="/etc/certs/mcp-client.pem",

client_key="/etc/certs/mcp-client-key.pem"

)

# Now send standard MCP messages through custom transport

message = Message.create_request(

method="tools/list",

id=1

)

response = await transport.send_mcp_message(message)

# Response is standard MCP, transport handling is custom

What’s Standard: The MCP message structure, methods, and response format.

What’s Custom: SAML authentication, token management, corporate certificate handling, and audit logging.

Why It Works: The MCP protocol is transport-agnostic. Your custom transport handles authentication and security. The messages flowing through it are standard MCP.

Integration: Standard MCP clients can’t use this transport. But clients in your corporate environment can. They get both MCP compatibility and corporate security compliance.
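The refresh decision in ensure_valid_token is the part most worth unit testing, and it can be isolated from the network code. Here is a minimal sketch using timezone-aware datetimes; `needs_refresh` and `expiry_from_claims` are hypothetical helper names, and the five-minute margin mirrors the example above.

```python
from datetime import datetime, timedelta, timezone
from typing import Optional


def expiry_from_claims(claims: dict) -> datetime:
    """Convert a JWT 'exp' claim (seconds since the epoch) to an aware UTC datetime."""
    return datetime.fromtimestamp(claims["exp"], tz=timezone.utc)


def needs_refresh(
    expiry: Optional[datetime],
    now: datetime,
    margin: timedelta = timedelta(minutes=5),
) -> bool:
    """True when there is no token yet or it expires within the safety margin."""
    if expiry is None:
        return True
    return now + margin >= expiry


now = datetime(2024, 1, 15, 10, 30, tzinfo=timezone.utc)
print(needs_refresh(None, now))
print(needs_refresh(now + timedelta(minutes=4), now))
print(needs_refresh(now + timedelta(minutes=30), now))
```

Passing `now` in explicitly keeps the function deterministic, so tests do not depend on the wall clock.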

Example 3: Custom Model Sampling Parameters

Scenario: Your company fine-tuned a Claude model for legal document analysis. This custom model has specialized sampling parameters not in the standard MCP specification.

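Solution: Extend sampling configuration with namespaced custom parameters. The code below is a simplified illustration: client.sample(), @mcp.sampling_handler(), and SamplingResult are stand-ins rather than confirmed SDK APIs. In the specification, sampling requests (sampling/createMessage) are sent by the server and fulfilled by the client.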

from mcp.client import Client

from mcp.types import PromptMessage

from typing import Optional

class LegalModelClient:

"""

MCP client with extensions for custom legal analysis model.

Uses standard MCP protocol with custom sampling parameters.

"""

def __init__(self, mcp_client: Client):

self.client = mcp_client

async def analyze_contract(

self,

contract_text: str,

analysis_type: str,

# Standard MCP parameters

temperature: float = 0.7,

max_tokens: int = 4096,

# Custom parameters for legal model

citation_mode: str = "detailed",

jurisdiction: str = "US",

risk_threshold: float = 0.8

) -> dict:

"""

Analyze legal contract using custom model with specialized parameters.

Standard parameters (temperature, max_tokens) work with any model.

Custom parameters (citation_mode, jurisdiction, risk_threshold)

are specific to our legal model.

"""

# Create prompt with standard MCP format

prompt = PromptMessage(

role="user",

content=f"""

Analyze this contract for {analysis_type}:

{contract_text}

Provide analysis following our legal framework.

"""

)

# Sample with custom parameters

result = await self.client.sample(

messages=[prompt],

# Standard parameters - work with any MCP server

temperature=temperature,

max_tokens=max_tokens,

# Custom parameters - namespaced to avoid conflicts

sampling_params={

"_custom_legal_model": {

"citation_mode": citation_mode,

"jurisdiction": jurisdiction,

"risk_threshold": risk_threshold,

"enable_precedent_search": True,

"statute_validation": True

}

}

)

# Response includes custom metadata

return {

"analysis": result.content,

"citations": result.metadata.get("_custom_legal_model", {}).get("citations", []),

"risk_score": result.metadata.get("_custom_legal_model", {}).get("risk_score"),

"precedents": result.metadata.get("_custom_legal_model", {}).get("precedents", [])

}

Server side handling:

from mcp.server.fastmcp import FastMCP

import anthropic

mcp = FastMCP("legal-analysis-server")

@mcp.sampling_handler()

async def handle_sampling(

messages: list[PromptMessage],

sampling_params: dict

) -> SamplingResult:

"""

Handle sampling requests with support for custom legal parameters.

Standard clients send standard parameters.

Legal clients send custom parameters in namespaced field.

"""

# Extract standard parameters

temperature = sampling_params.get("temperature", 0.7)

max_tokens = sampling_params.get("max_tokens", 4096)

# Extract custom parameters if present

legal_params = sampling_params.get("_custom_legal_model", {})

# Build prompt based on custom parameters

system_prompt = "You are a legal analysis assistant."

if legal_params:

citation_mode = legal_params.get("citation_mode", "standard")

jurisdiction = legal_params.get("jurisdiction", "US")

system_prompt += f"""

Citation Mode: {citation_mode}

Jurisdiction: {jurisdiction}

Provide citations in {citation_mode} format.

Apply {jurisdiction} legal standards.

"""

# Call custom legal model (fine-tuned Claude)

# The call below is awaited, so the async client is required

client = anthropic.AsyncAnthropic(api_key=CUSTOM_MODEL_API_KEY)

response = await client.messages.create(

model="claude-3-opus-legal-2024", # Custom fine-tuned model

system=system_prompt,

messages=[{"role": m.role, "content": m.content} for m in messages],

temperature=temperature,

max_tokens=max_tokens

)

# Include custom metadata in response

metadata = {}

if legal_params:

metadata["_custom_legal_model"] = {

"citations": extract_citations(response.content),

"risk_score": calculate_risk_score(response.content),

"precedents": find_precedents(response.content)

}

return SamplingResult(

content=response.content[0].text,

model="claude-3-opus-legal-2024",

stop_reason=response.stop_reason,

metadata=metadata

)

What’s Standard: The sampling API, message format, and core parameters.

What’s Custom: Legal-specific parameters, custom model invocation, specialized metadata.

Why It Works: Standard clients can still use your server - they just won’t get the legal-specific features. Legal clients get enhanced functionality while remaining MCP-compatible.

Graceful Degradation: If a standard client calls this server, it works fine. The custom parameters are optional. The server provides basic sampling even without them.

Common Mistakes to Avoid

Flexibility is powerful, but easy to misuse. Here are mistakes that break MCP compatibility.

Mistake 1: Modifying Core Message Format

Don’t do this:

# WRONG - Custom message format

{

"mcp-version": "1.0", # Not in JSON-RPC spec

"action": "list-tools", # Should be "method"

"correlation_id": "abc-123", # Should be "id" (a string or integer)

"timestamp": "2024-01-15T10:30:00Z" # Not a standard field

}

This breaks every standard MCP client. They expect JSON-RPC 2.0 format. Your custom format is incompatible.

Do this instead:

# CORRECT - Standard format with custom metadata

{

"jsonrpc": "2.0",

"method": "tools/list",

"id": 1,

# Custom fields in params, properly namespaced

"params": {

"_custom_company": {

"correlation_id": "abc-123",

"timestamp": "2024-01-15T10:30:00Z"

}

}

}

Keep the core structure standard. Add custom data in appropriate fields.

Mistake 2: Creating Incompatible Extensions

Don’t do this:

# WRONG - Required custom fields

@mcp.tool()

async def query_data(

query: str,

api_key: str, # Required custom field

tenant_id: str # Required custom field

):

"""This tool requires custom authentication"""

pass

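Now standard clients can’t use your tool. The schema advertises api_key and tenant_id as required arguments, but a standard client has no values to supply, and the credentials would have to pass through the model.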

Do this instead:

# CORRECT - Handle auth in server, not client

def create_authenticated_server(api_key: str, tenant_id: str) -> FastMCP:
    """Build a server whose tools close over server-side credentials."""
    mcp = FastMCP("authenticated-server")

    @mcp.tool()
    async def query_data(query: str):
        """Standard tool interface, auth handled server-side"""
        # Use api_key and tenant_id from the enclosing scope here
        pass

    return mcp

Standard clients can call query_data with just query. Your server handles authentication internally.

Mistake 3: Over-Engineering Simple Needs

Don’t do this:

# WRONG - Unnecessary complexity

{

"jsonrpc": "2.0",

"method": "tools/call",

"params": {

"name": "get_user",

"arguments": {"user_id": 123},

"_custom_metadata": {

"version": "2.0",

"schema_version": "1.5",

"compatibility_mode": "strict",

"validation_level": "full",

"error_handling": "verbose",

"retry_policy": {

"max_attempts": 3,

"backoff": "exponential",

"initial_delay": 1000

},

"monitoring": {

"trace_id": "abc-123",

"span_id": "def-456",

"parent_span": "ghi-789"

}

}

}

}

That’s overkill for a simple tool call. Most of this metadata isn’t used.

Do this instead:

# CORRECT - Add what you actually need

{

"jsonrpc": "2.0",

"method": "tools/call",

"params": {

"name": "get_user",

"arguments": {"user_id": 123},

"_custom_company": {

"trace_id": "abc-123" # Only what you actually use

}

}

}

Add extensions when you have a real need. Don’t build elaborate structures “just in case.”

Mistake 4: Poor Documentation

Don’t do this:

# WRONG - Undocumented custom behavior

@mcp.tool()

async def process_data(data: str, mode: str):

"""Process some data"""

# mode="advanced" enables secret features

# mode="legacy" uses old algorithm

# mode="experimental" might crash

pass

Clients have no idea what “mode” values are valid or what they do.

Do this instead:

# CORRECT - Clear documentation

from typing import Any

@mcp.tool()

async def process_data(

data: str,

mode: str = "standard"

) -> dict[str, Any]:

"""

Process data with configurable algorithm.

Args:

data: Input data to process

mode: Processing mode. Valid values:

- "standard" (default): Balanced speed and accuracy

- "fast": Faster processing, lower accuracy

- "accurate": Slower processing, higher accuracy

- "legacy": Compatible with old data formats (deprecated)

Returns:

{

"result": processed data,

"mode_used": actual mode (may differ from requested),

"processing_time": milliseconds taken

}

Raises:

ValueError: If mode is not recognized

"""

valid_modes = ["standard", "fast", "accurate", "legacy"]

if mode not in valid_modes:

raise ValueError(f"Invalid mode. Choose from: {valid_modes}")

# Implementation

Document your extensions thoroughly. Users need to know what’s available and how to use it.
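Documentation in a docstring helps humans; encoding the valid values in the type helps tooling too. The sketch below uses `typing.Literal` so the list of modes lives in one place. `Mode` and `validate_mode` are hypothetical names, and whether your SDK surfaces a `Literal` annotation as an enum in the generated tool schema is an assumption worth checking for your version.

```python
from typing import Literal, get_args

# Single source of truth for the accepted modes
Mode = Literal["standard", "fast", "accurate", "legacy"]


def validate_mode(mode: str) -> str:
    """Return the mode unchanged if it is allowed, otherwise raise ValueError."""
    valid = get_args(Mode)
    if mode not in valid:
        raise ValueError(f"Invalid mode {mode!r}. Choose from: {', '.join(valid)}")
    return mode


print(validate_mode("fast"))
try:
    validate_mode("experimental")
except ValueError as exc:
    print(exc)
```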

Best Practices for Extensions

Follow these patterns to extend MCP without breaking compatibility.

Practice 1: Always Namespace Custom Fields

Use a consistent prefix for all custom fields:

# CORRECT - Properly namespaced

{

"jsonrpc": "2.0",

"method": "tools/call",

"params": {

"name": "query_db",

"arguments": {

"query": "SELECT * FROM users"

},

# All custom fields under one namespace, alongside (not inside) the tool arguments

"_custom_acme_corp": {

"timeout": 30,

"retry_count": 3,

"priority": "high",

"cache_ttl": 300

}

},

"id": 1

}

Benefits:

- Avoid conflicts with future MCP additions

- Clear which fields are standard vs custom

- Easy to filter out custom fields if needed

- Can version your namespace independently

Practice 2: Make Extensions Optional

Design so standard clients work without understanding your extensions:

from typing import Optional

@mcp.tool()
async def search_documents(
    query: str,
    limit: int = 10,
    # Optional extension: standard clients simply omit it
    ranking_model: Optional[str] = None,
) -> list[dict]:
    """
    Search documents with optional advanced features.

    Works with standard clients using query and limit.
    Advanced clients can pass ranking_model to rerank the results.
    """
    # Default behavior for standard clients
    results = await basic_search(query, limit)

    if ranking_model is not None:
        # Enhanced behavior for advanced clients
        results = await rerank_results(results, model=ranking_model)

    return results

# An advanced client adds the optional argument to its tools/call request:
# {"query": "<search terms>", "ranking_model": "legal-documents-v2"}

Standard clients get basic functionality. Advanced clients get enhancements.

Practice 3: Document Extension Points

Create clear documentation for your extensions:

"""

Custom Extensions for Legal Analysis MCP Server

================================================

This server extends standard MCP with legal-specific features.

Standard Compliance:

- Fully compatible with MCP specification revision 2024-11-05

- All standard tools/resources/prompts work as expected

- No modifications to core protocol

Custom Extensions:

1. Legal Citation Support

- Namespace: _custom_legal.citations

- Available in: all sampling requests

- Format: See docs/citations.md

2. Jurisdiction Handling

- Namespace: _custom_legal.jurisdiction

- Valid values: US, UK, EU, AU, CA

- Affects precedent search and statute validation

3. Risk Scoring

- Namespace: _custom_legal.risk_scoring

- Returns: 0.0 (low) to 1.0 (high risk)

- Based on: contract terms, precedents, regulations

Version Compatibility:

- v1.x: Initial release

- v2.x: Added risk scoring (v1 still supported)

- v3.x: Current version

Migration Guide:

- v1 → v2: No breaking changes

- v2 → v3: Risk scoring moved to metadata

Examples:

See examples/ directory for complete code samples.

"""

Good documentation includes:

- What’s standard vs custom

- How to use extensions

- Version information

- Migration guides

- Complete examples

Practice 4: Test with Multiple Clients

Don’t just test with your own client:

import pytest

from mcp.client import Client

from your_server import create_legal_server

from your_custom_client import LegalClient

@pytest.mark.asyncio

async def test_standard_client_compatibility():

    """Verify server works with standard MCP client"""

    server = create_legal_server()

    # Use standard MCP client - no custom code

    client = Client()

    await client.connect_to_server(server)

    # Test standard operations

    tools = await client.list_tools()

    assert len(tools) > 0

    result = await client.call_tool(

        "analyze_contract",

        {"contract_text": "Sample contract"}

    )

    # Standard client should work without custom features

    assert result["success"]

@pytest.mark.asyncio

async def test_custom_client_features():

    """Verify custom features work for advanced clients"""

    server = create_legal_server()

    client = LegalClient()

    await client.connect_to_server(server)

    # Test custom features

    result = await client.analyze_with_citations(

        contract_text="Sample contract",

        citation_mode="detailed",

        jurisdiction="US"

    )

    assert "citations" in result

    assert result["jurisdiction"] == "US"

Test that:

- Standard clients can use your server

- Custom features work for advanced clients

- Graceful degradation works correctly
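The third check, graceful degradation, is the one most often skipped. Below is a self-contained sketch of what such a test can exercise. The `analyze_contract` handler and its `_custom_legal` input are hypothetical stand-ins for your own server code, not an MCP SDK API.

```python
import asyncio
from typing import Any


async def analyze_contract(params: dict[str, Any]) -> dict[str, Any]:
    """Hypothetical tool handler: standard result plus optional extension data."""
    result: dict[str, Any] = {"success": True, "content": "Analysis result..."}
    ext = params.get("_custom_legal")
    # Only add extension data when the client sent a well-formed request for it
    if isinstance(ext, dict) and "jurisdiction" in ext:
        result["_custom_legal"] = {"jurisdiction": ext["jurisdiction"], "citations": []}
    return result


async def main() -> tuple[dict[str, Any], dict[str, Any], dict[str, Any]]:
    base = {"contract_text": "Sample contract"}
    standard = await analyze_contract(base)
    extended = await analyze_contract({**base, "_custom_legal": {"jurisdiction": "US"}})
    # A malformed extension should degrade to standard behavior, not fail
    malformed = await analyze_contract({**base, "_custom_legal": "oops"})
    return standard, extended, malformed


standard_result, extended_result, malformed_result = asyncio.run(main())
```

The same three cases (no extension, valid extension, malformed extension) translate directly into three pytest functions against your real server.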

Practice 5: Version Your Extensions

When you change custom features, version them:

# Extension versioning in responses

{

    "jsonrpc": "2.0",

    "result": {

        "content": "Analysis result...",

        # Include version info

        "_custom_legal": {

            "version": "2.1.0",

            "features": ["citations", "risk_scoring", "precedents"],

            "deprecated": ["old_citation_format"],

            "next_breaking": "3.0.0"

        }

    },

    "id": 1

}

Clients can check version and adapt:

async def call_legal_server(client: Client):

    """Adapt to server version"""

    result = await client.call_tool("analyze_contract", {...})

    # Check extension version

    ext_version = result.get("_custom_legal", {}).get("version")

    # Compare numerically: as strings, "10.0.0" would sort before "2.0.0"

    if ext_version and tuple(int(p) for p in ext_version.split(".")) >= (2, 0, 0):

        # Use v2 features

        risk_score = result["_custom_legal"]["risk_score"]

    else:

        # Fall back to v1 behavior

        risk_score = calculate_risk_from_content(result["content"])

    return risk_score

Versioning enables:

- Gradual rollout of new features

- Backward compatibility maintenance

- Clear deprecation path

- Client adaptation to server capabilities
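Client adaptation can go beyond the version number and look at the advertised feature list as well. The sketch below assumes the `_custom_legal` block shape shown in the response example above; `parse_version` and `supports` are hypothetical helper names.

```python
from typing import Any


def parse_version(version: str) -> tuple[int, int, int]:
    """Turn 'major.minor.patch' into a tuple that compares numerically."""
    major, minor, patch = (int(part) for part in version.split("."))
    return major, minor, patch


def supports(result: dict[str, Any], feature: str, minimum: str) -> bool:
    """True if the response advertises `feature` at or above version `minimum`."""
    ext = result.get("_custom_legal") or {}
    version = ext.get("version")
    if version is None:
        return False  # server does not implement the extension at all
    return (
        parse_version(version) >= parse_version(minimum)
        and feature in ext.get("features", [])
        and feature not in ext.get("deprecated", [])
    )


response = {
    "content": "Analysis result...",
    "_custom_legal": {
        "version": "2.1.0",
        "features": ["citations", "risk_scoring", "precedents"],
        "deprecated": ["old_citation_format"],
        "next_breaking": "3.0.0",
    },
}
print(supports(response, "risk_scoring", "2.0.0"))  # True
```

Checking `features` and `deprecated` together lets a client stop relying on a feature before the `next_breaking` release removes it.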

FAQ

Can I add custom fields to standard MCP messages?

Yes, but use proper namespacing. Prefix custom fields with _custom_ followed by your organization or domain name (like _custom_acme_corp or _custom_legal). This prevents conflicts with the standard protocol and other extensions. Place custom fields in the params object for requests and inside the result object for responses, as in the versioning example above. Never modify or add to the required envelope fields: jsonrpc, method, id, and result.
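As a rough sketch of that rule on the request side: the helper below adds extension data under a namespaced key in `params` and leaves the envelope alone. `with_extension` is a hypothetical helper name and the `priority` field is invented for illustration; `tools/call` is the standard MCP method for invoking a tool.

```python
import json
from typing import Any

REQUIRED_FIELDS = ("jsonrpc", "method", "id")  # envelope fields extensions must not touch


def with_extension(
    request: dict[str, Any], namespace: str, data: dict[str, Any]
) -> dict[str, Any]:
    """Return a copy of `request` with `data` added under params[namespace]."""
    if not namespace.startswith("_"):
        raise ValueError("custom namespaces should start with an underscore")
    params = dict(request.get("params", {}))
    if namespace in params:
        raise ValueError(f"{namespace} is already present in params")
    params[namespace] = data
    extended = {**request, "params": params}
    # The required envelope fields must survive unchanged
    assert all(extended[f] == request[f] for f in REQUIRED_FIELDS)
    return extended


request = {
    "jsonrpc": "2.0",
    "method": "tools/call",
    "params": {"name": "analyze_contract", "arguments": {"contract_text": "Sample contract"}},
    "id": 1,
}
extended = with_extension(request, "_custom_acme_corp", {"priority": "high"})
print(json.dumps(extended, indent=2))
```

Because the helper copies `params` instead of mutating it, the original standard request stays available for clients or servers that do not understand the extension.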

How do I ensure my extensions work with Claude, GPT-4, and other clients?

Build on top of standard MCP without modifying core structures. Test with multiple MCP-compatible clients. Use feature detection to handle clients that don’t support your extensions - provide basic functionality to all clients and enhanced features to those that send custom parameters. Document which clients support your custom features. When possible, make your extensions optional so standard clients still work.

What’s the process for getting custom features into the official spec?

Start by implementing your feature as a namespaced extension. Use it in production and document real-world benefits. Engage with the MCP community on GitHub to gather feedback and find others with similar needs. When you have evidence of adoption and clear use cases, submit a proposal to the MCP specification repository with documentation, example code, and justification for standardization.

How should I version my custom extensions?

Include version information in your namespaced fields using semantic versioning (major.minor.patch). Maintain backward compatibility for minor and patch versions - never break existing clients on small updates. Document breaking changes clearly for major version updates. Include version info in both requests and responses so clients can adapt to server capabilities. Consider including a deprecated field listing features being phased out and a next_breaking field indicating when breaking changes will occur.

What happens if my extension conflicts with future MCP updates?

Proper namespacing prevents most conflicts since your _custom_company fields won’t collide with new standard fields. Monitor the MCP specification repository for proposed changes. Test your extensions against new MCP versions early. If a conflict occurs, you have options: update your namespace (minor inconvenience), migrate to official features if your functionality was standardized (ideal outcome), or maintain compatibility layers supporting both old and new approaches. The namespace pattern gives you isolation from standard protocol evolution.
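A compatibility layer can be as small as one accessor function. In the sketch below, the top-level `risk_score` field is a purely hypothetical future standard location (nothing like it exists in the MCP spec today), and `read_risk_score` is an illustrative name.

```python
from typing import Any, Optional


def read_risk_score(result: dict[str, Any]) -> Optional[float]:
    """Prefer a standardized field, fall back to the namespaced extension."""
    # Hypothetical standardized location (assumption, not in the MCP spec)
    if "risk_score" in result:
        return float(result["risk_score"])
    # Existing namespaced location used by older servers
    legacy = result.get("_custom_legal") or {}
    if "risk_score" in legacy:
        return float(legacy["risk_score"])
    return None  # server provides no risk score in either form


print(read_risk_score({"_custom_legal": {"risk_score": 0.7}}))  # 0.7
print(read_risk_score({"content": "Analysis result..."}))       # None
```

Callers depend only on the accessor, so the fallback branch can be deleted once every server you talk to has migrated.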

Conclusion

MCP succeeds where other protocols failed by defining minimal standards.

The core protocol is rigid: JSON-RPC 2.0, specific method names, standard error codes, required initialization. This rigidity enables interoperability. Any spec-compliant MCP client can talk to any spec-compliant MCP server.

But MCP leaves most things open: what tools exist, what resources are available, how transport works, what custom parameters you need. This flexibility enables innovation.

You’re not waiting for a standards committee to add your database connector. You’re not stuck with features someone else chose. You build what you need while staying compatible.

The balance works because MCP standardizes the right things:

- Message format (how we communicate)

- Method names (what operations exist)

- Session lifecycle (how connections work)

- Error handling (how failures are reported)

Everything else is yours to customize:

- Tool implementations (what your tools do)

- Resource types (what data you expose)

- Transport mechanisms (how messages travel)

- Custom parameters (what extra features you need)

This isn’t a compromise. It’s intentional design. Standardize what enables interoperability. Leave flexible what enables innovation.

Want to learn more about MCP’s architecture? Read The Core Concepts of MCP Explained with Examples next for a deeper dive into tools, resources, prompts, and sessions.

Built something interesting with MCP extensions? We’d love to hear about it. Share your implementation or ask questions in the comments.

About Angry Shark Studio

Angry Shark Studio is a professional Unity AR/VR development studio specializing in mobile multiplatform applications and AI solutions. Our team includes Unity Certified Expert Programmers with extensive experience in AR/VR development.
